How the Reaction-Wheel Unicycle Works

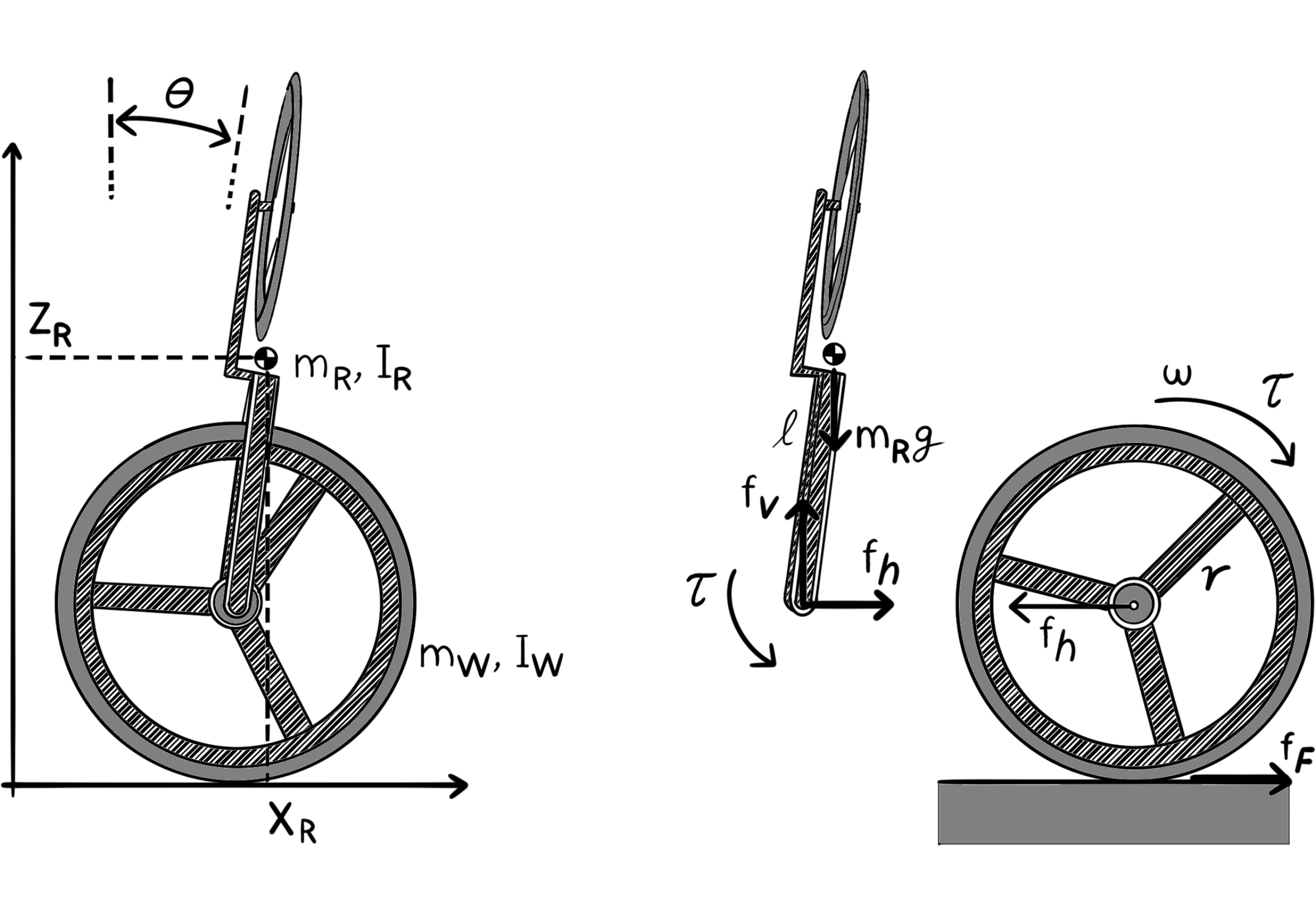

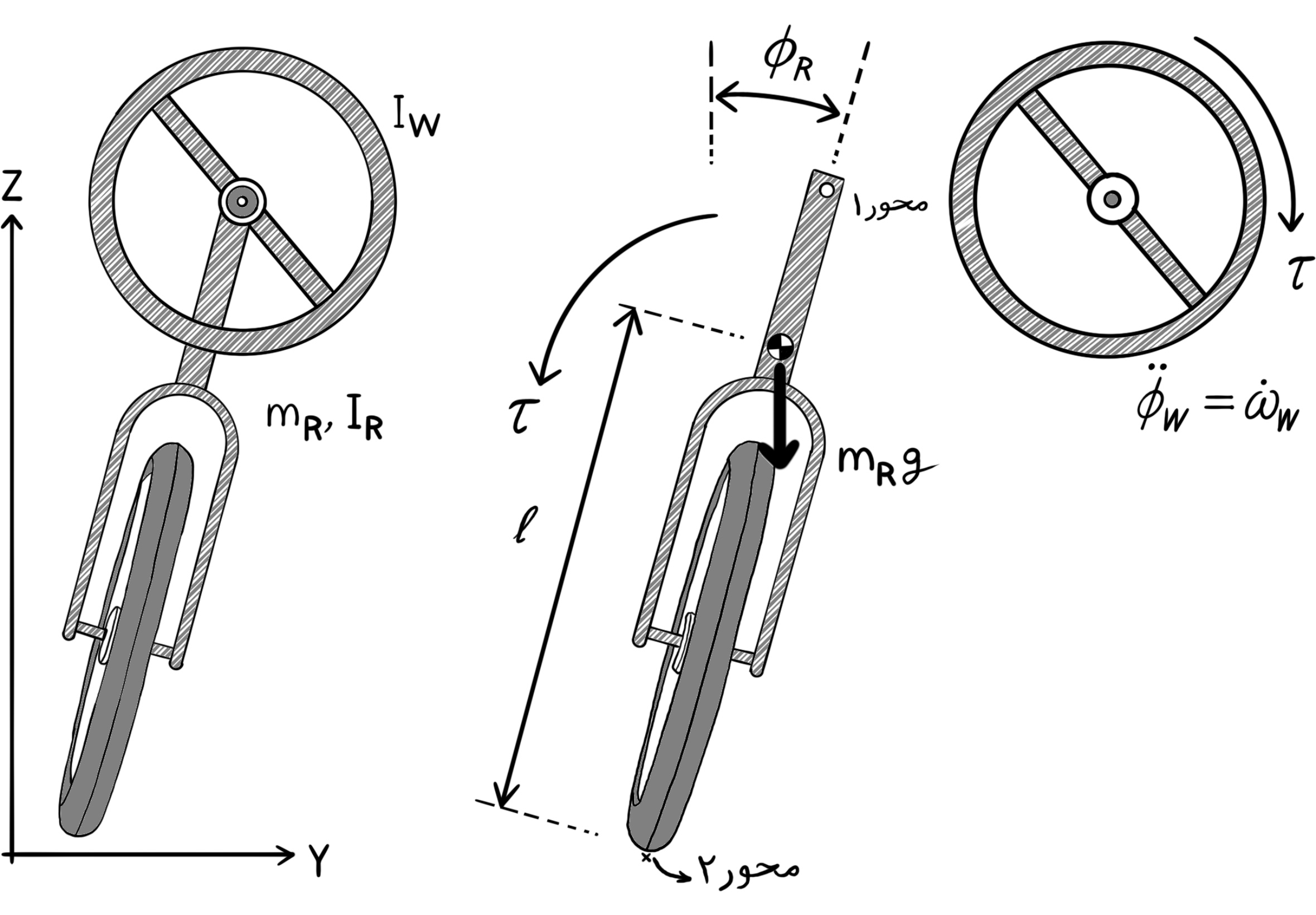

This is a model of a unicycle with two symmetrically attached rotors. One of the reasons the matrix of inertia is not trivial is that the rotors’ axes of rotation do not intersect at a point. The constraint on the system is conservation of angular momentum. The angular velocity of the body is related to the rotor velocities. That relation gives rise to a differential equation in the rotation group Special Orthogonal of real dimension 3 for the robot’s body. Using the Euler parameters of $SO(3)$ we obtain a local coordinate description of the differential equation, in terms of the roll, pitch and yaw angles. The robot can be repositioned by controlling the rotor velocities. The Linear Quadratic Regulator regulates the roll and pitch angles by a choice of a suitable input.

The Control of the Balancing Unicycle

Here we deal with non-linear control systems that are described in terms of either differential equations or difference equations. In other words we consider the follwoing two types:

Differential equations: $\left\{ \begin{array}{l} \dot{x}(t) = f(x(t), u(t)) &\\ y(t) = h(x(t), u(t)) \end{array} \right.$

Difference equations: $\left\{ \begin{array}{l} x(k + 1) = f(x(k), u(k)) &\\ y(k) = h(x(k), u(k)) \end{array} \right.$

The variable $x$ denotes the system state, the variable $u$ denotes the control input to the system, and finally, the $y$ variable denotes the output of the system. In the following, we explain how the system works through a robotics application. In this section, we see how the system works. But in the next section, we build a regulator to control the system state by generating suitable inputs for a given time frame.

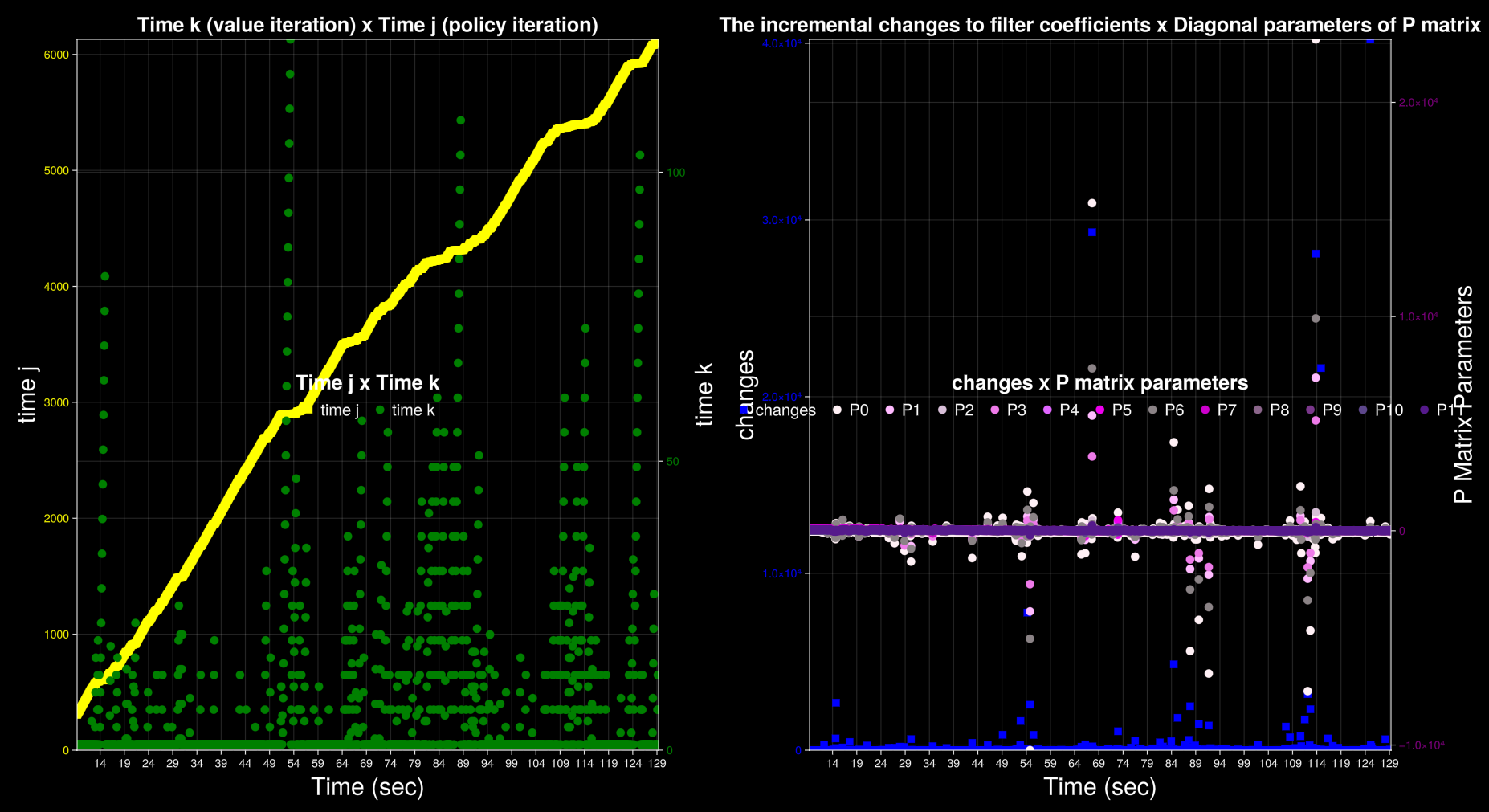

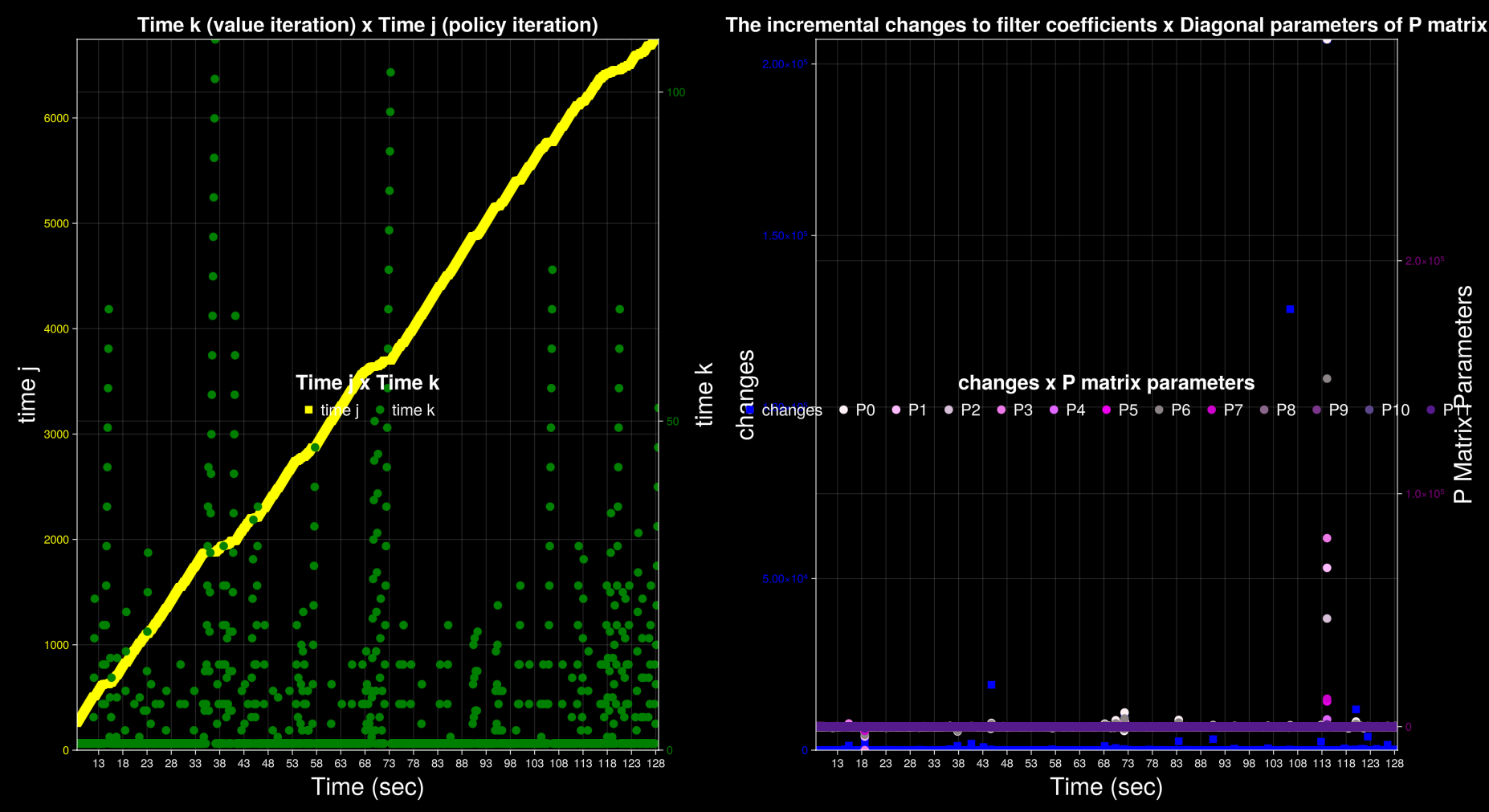

The policy function produces the control inputs $u(t)$ (or $u(k)$ in the descrete case). The feeadback policy is called optimal control whenever it makes the system state $x$ approximately equal to zero as the result of its application. The feeback policy is able to adapt through periodic policy updates. Between each two consecutive policy updates, a recursive relation updates filter coefficients, blocks of which are used to update the policy function. The policy update loop is slower whereas the filter coefficients update loop is faster.

The function $h$, which produces the output $y$, and the quality $Q(x, u)$ are similar functions. The similarity of $h(x, u)$ and the quality function $Q(x, u)$ is first because both have the same parameter signature that includes the statem state vector $x$ and the input vector $u$. Second, because both $h$ and $Q$ produce verifiable statements about the value of the ststem state at the next time step $k + 1$ or $t$ in the immediate future. Later in the next section, we see how a least squares relation can be used to calculate a difference equation between the desired state and the measured state, as a feedback signal for enhancing the quality function $Q$ and producing accurate outputs $y$ over time.

On one hand, the filter coefficients play the role of a critic that evaluates the quality of being in state $x$ and having taken input $u$. On the other hand, the policy plays the role of an actor that uses the system state $x$ as input to a matrix-vector product that produces the feedback policy $u$. The Actor/Critic architechture uses the first principles of reinforcement learning for adapting the optimal control inputs. The adpativeness of the controller increases the probability that the quality of the system state $x$ is measured higher as the time variable $t$ in differential equations (or $k$ in the case of difference equations) progresses forward in time.

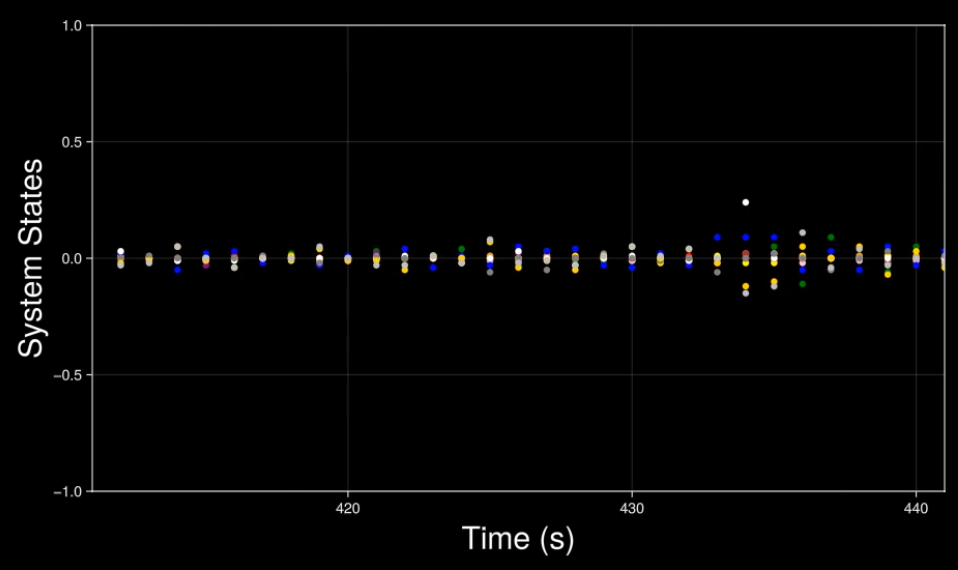

System states in real time. Even though the matrix of inertia (among other physical parameters) is unknown, the adaptive controller based on value iteration keeps the states stable and regulates them to zero.

Example

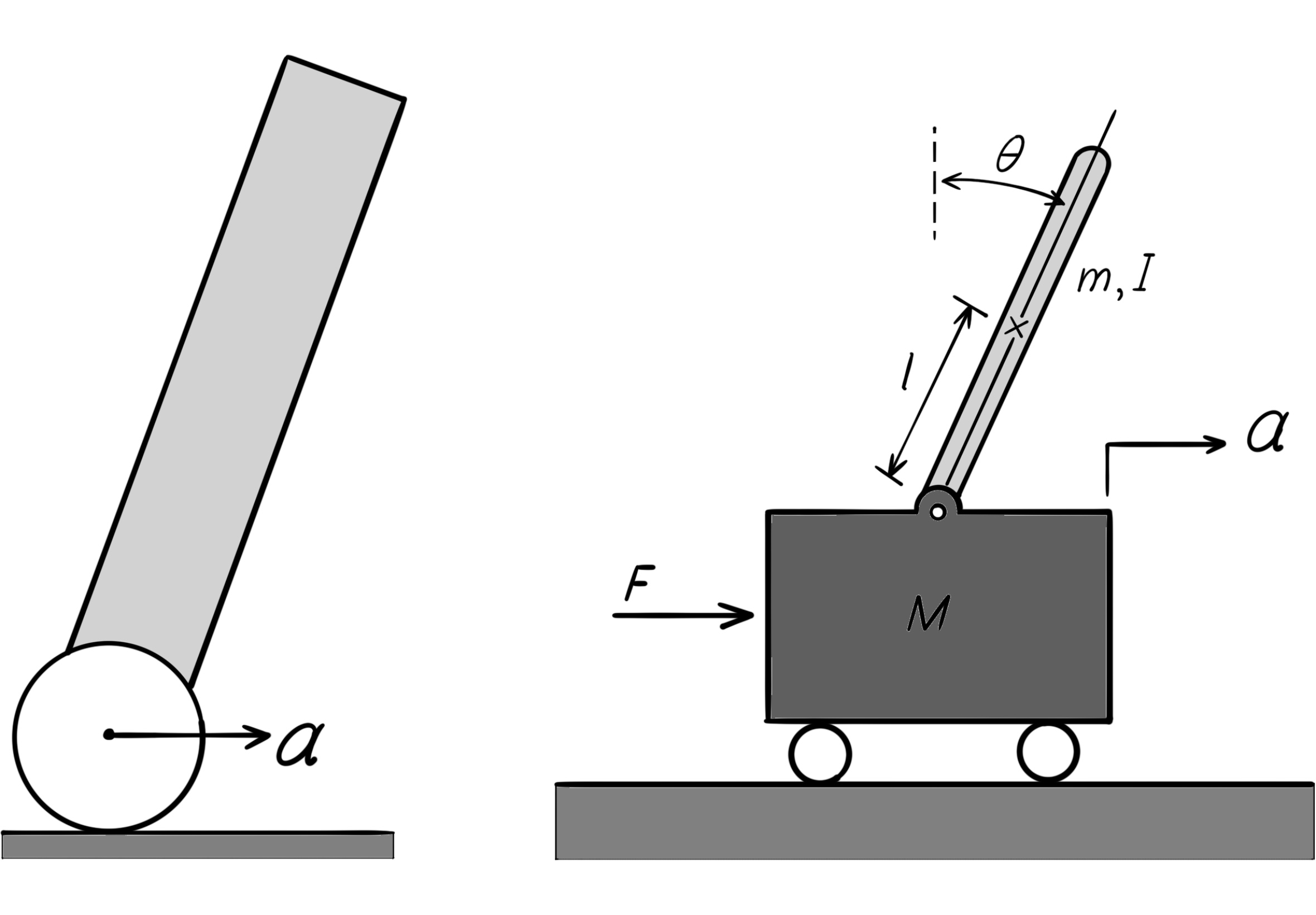

The robot that we build here is a self-balancing reaction wheel unicycle. As it is known from the name, this robot has only one wheel and therefore has one contact point with the ground surface. Just one contact point is the reason it is more complex compared to two-wheel balance robots. In fact, the robot has to preserve it balance in two directions around two perpendicular axes. In this robot, the motion in the forward/backward direction is like balancing a two-wheel balance robots, and so it obeys the same laws of physics. But since the robot has no way of moving to either left or right, in order to balance along the left/right direction we have to use another torque generator. For that purpose, we use a rotating mass, which is also called a reaction wheel. This rotating mass, which is mounted at the top of the robot, works based on the physical principle that if we apply a torque on it until it starts moving, then that mass also applies a torque on the robot's chassis, as large as the torque that is applied to it. One of the physical principles called the preservation of angular momentum explains this phenomenon. According to this principle, the sum of the angular momenta of a rotating set around a specific axis remains constant, unless it is acted upon by an external torque. So if one of the parts of the rotating set starts rotating using its internal torque, then the complement of the part (the other parts of the set) start rotating in the opposite direction in order to neutralize the internal motion of that part. Otherwise the angular momentum of the entire set will not be preserved. Using this reaction torque we can take control of the angle of the robot's body in the left and right directions.

The one-wheel balance robot may not seem so practical at first, but similar to two-wheel balance robots, or different kinds of the inverted pendulum, provides proper conditions for experimenting with various control algorithms. In addition, the most important part of the robot, the reaction wheel has a special application in satellites. After a satellite is placed into orbit around a celestial body, the only force acting on it is caused by the gravitational field. Therefore, it will have no control over its own motion. In order for the satellite to make small maneuvres along its path, or to be able to make small adjustments to its orbit, usually they equip it with three motion systems: the propulsion motor, the electromagnetic torque generator, or the reaction wheel. The first item is outside of the scope of this project. The reaction wheel is applied in satellites by rotating the wheel in the opposite direction for as much as needed for pointing at a specific direction. The amount of rotation is determined based on the ratio of the rotational momenta between the satellite and the wheel. To control the rotation of the satellite in all spatial directions, it is equipped with three wheels that are mounted in mutually perpendicular directions. Professional motorcyclists also take advantage of this property of the preservation of rotational inertia. Whenever a motorcyclist makes a jump and is detatched from the Earth, The only force acting on in that moment is the gravity of the Earth, which is outside of the control of the cyclist. In this situation, the cyclist can set its landing angle (attack vector) by accelerating the rear wheel or decelerating (braking). The effect of the reaction of the rear wheel that is applied to the body of the motorcycle, rotates the whole system in the upward or downward direction. To build this robot, it is required to become familiar with the mechanical structure, mathematical modelling, control algorithms, digital design and electronic circuits.

The Z-Euler Angle Is Not Observable

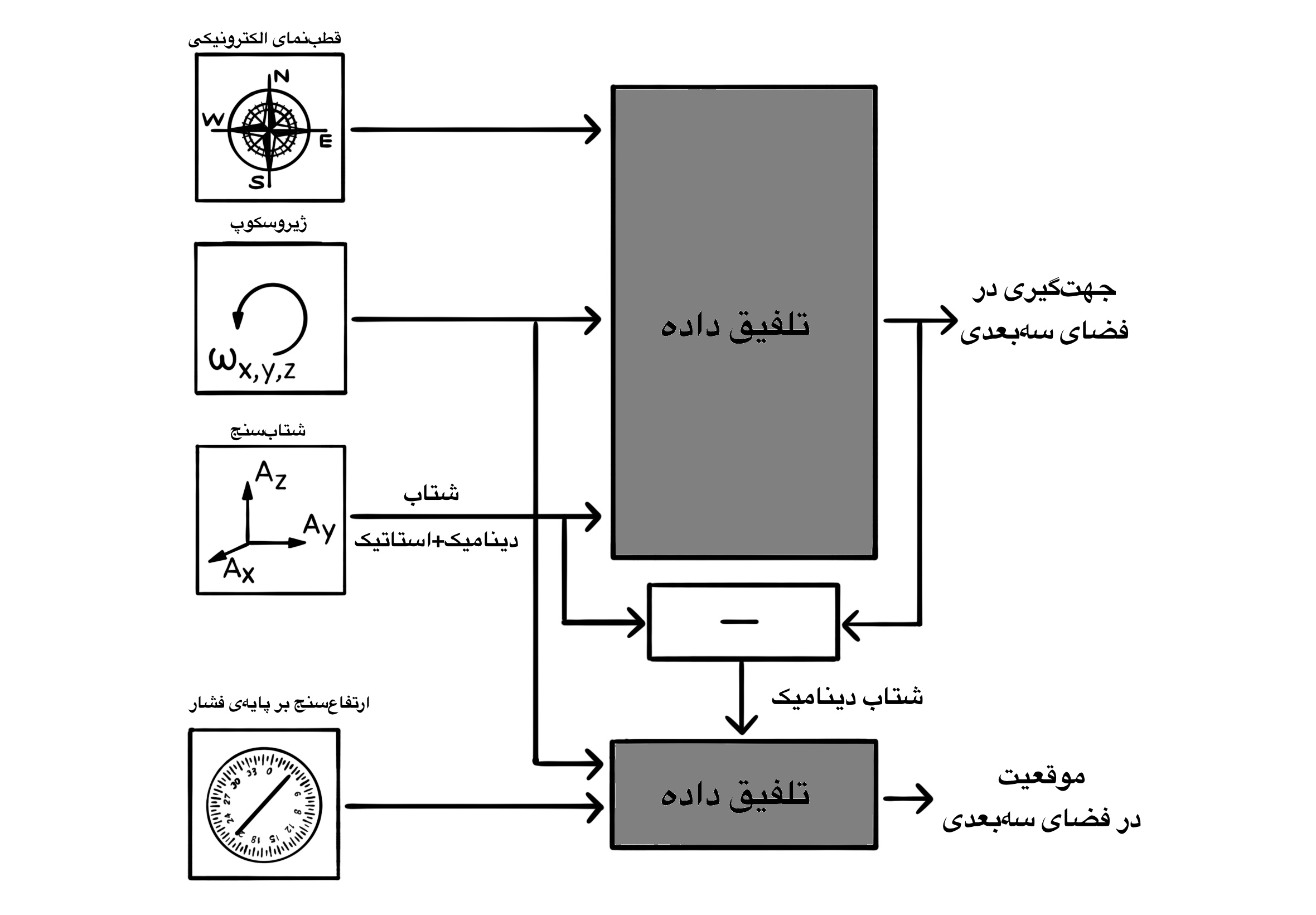

The control and navigation of mobile robots is not pissible without knowing the position. For positioning, various sensors have been designed and built, which are used based on their specific applications. Among positioning systems one can name a list: accurate accelerometrs used in rockets, existing gyroscopes in flying machines, altimeters, navigation systems based on the magnetic field of the Earth, or even more advanced navigation systems based on the images of stars that are used in satellites and spacecrafts.

Nowadays, the electromechanical sensors are manufactured in small scales (micrometers). This technology is known by the name of Micro-Electro-Mechanical Systems (MEMS). The birth of the MEMS technology has had a great effect on the price, size and the improved precision of different kinds of electronic sensors in the market. This subject makes it possible to use a multiple of such sensors in a small robot. In some cases, manufacturers offer multiple sensors of different types in a unit microchip package.

Among positioning peripherals, sensors that measure the acceleration, the rotational velocity (gyroscopes), and the magnetic field are the most applicable in small self-driving robots. This section focuses on the accelerometer and the gyroscope sensors. Using accelerometers you can calculate the accleration of your robot, along with its velocity and position through integration. You should know that the gravitational field of the Earth has an effect on the measurements of an accelerometer. This issue makes it harder to find the position, but it is useful for measuring the deviation from the gravitational direction (the vertical line). Also, the gyroscope essentially measures the angular velocity, after which it will be possible to calculate the angular position (direction) using integration. This way, with the help of accelerometrs and gyroscopes you have the ability to measure the position and orientation of the motion of your robot, and in particular the estimate of the angle of deviation from the vertical line (the direction of gravity) is possible.

Acclerometers

Every accelerometer based on Micro Electro Mechanical Systems (MEMS) has some sort of moving part inside of it, such that it moves under the influence of external forces. This part is held in place using a spring structure, and the displacement caused by the external force on it, is measured using various methods such as the change in capacitance. The Hooke's law states that the force excerted by a spring is directly proportional to the displacement caused by that force. Then, knowing the spring constant (the force per unit of distance traveled) and the mass of the part, this displacement is transformed to its equivalent acceleration. Therefore, MEMS accelerometers measure an external force excerted on the moving part. That is why these accelerometers measure the static acceleration (the Earth's gravity) and the dynamical acceleration (due to changes in velocity) the same and decomposing the two measurements is your responsibility. In this way, if the direction of the accelerometer is in the direction of the Earth's gravitational field, then the measurement value is the representation of the acceleration due to motion in addition to the gravitational acceleration ($9.8 \frac{m}{s^2}$). And if the direction of the measurement of the sensor is in the horizontal direction (perpendicular to the gravitational field of the Earth) then only the dynamical acceleration is measured and gravity will not have any effect on the measurement. So, in case a one-axis accelerometer (capable of measuring in one of the directions of the coordinate system) is used in a system, the orientation of it must be specified with respect to the gravitational direction so that the static acceleration is computable.

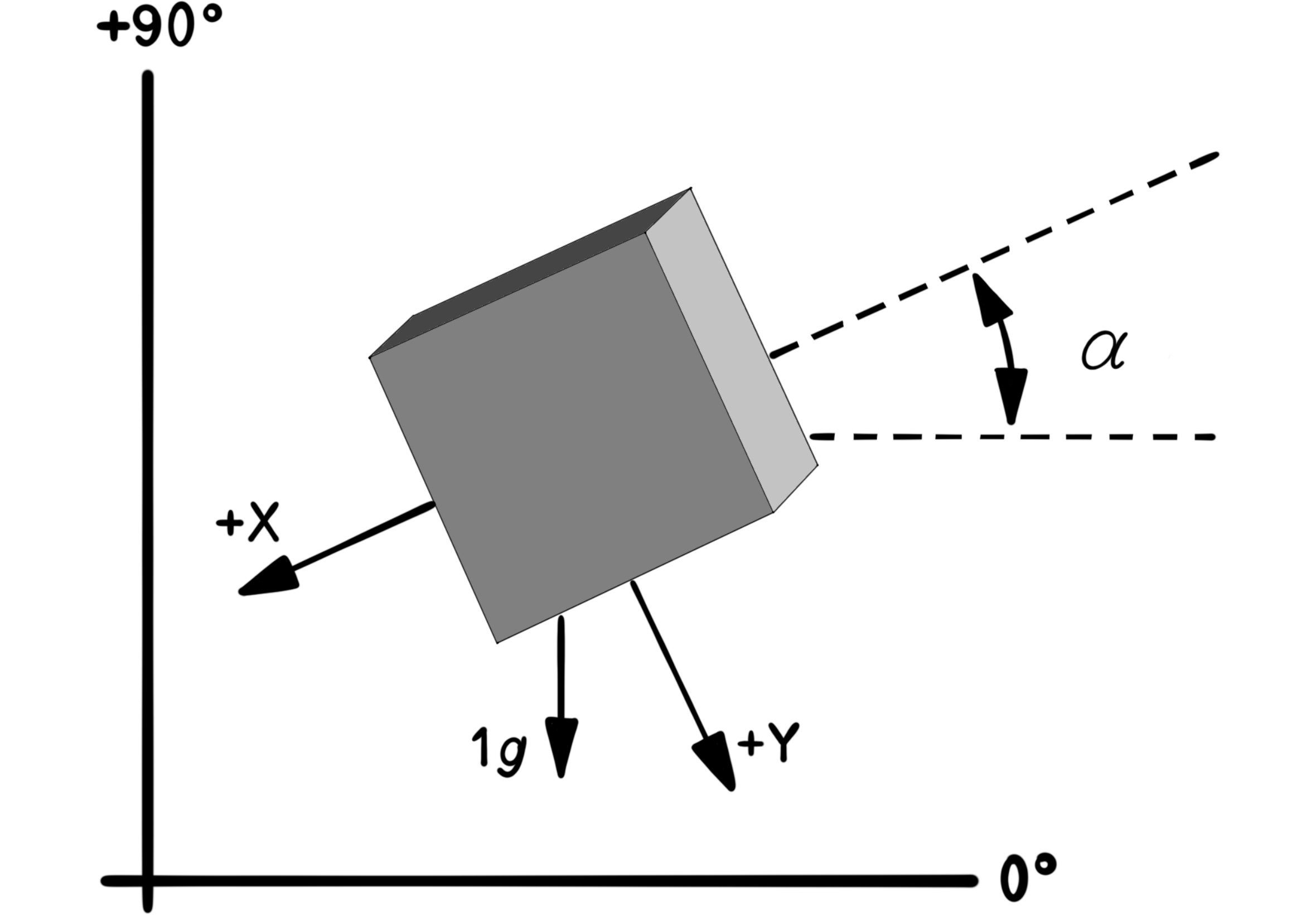

Using a two-axis accelerometer for measuring the direction of the Earth's gravity in the plane perpendicular to the Earth. In this figure, the orientation of the two-axis accelerometer (X-Y) with respect to the horizontal direction is computable using the given relation.

$\alpha = tan^{-1}(\frac{A_X}{A_Y})$

Now, imagine that you have two or three accelerometers such that their directions of measurement are mutually orthogonal (like the X, Y, and Z coordinate axes of the standard Cartesian coordinate system). If the velocity of this set is constant and only the static accelration due to gravity acts on it, by comparing the ratio of the measured accelerations across the axes, the orientation angle of the set with respect to the direction of gravity is computable. This is the way that many electronic balances and mobile robots use to measure the angle of orientation with respect to the direction of gravity.

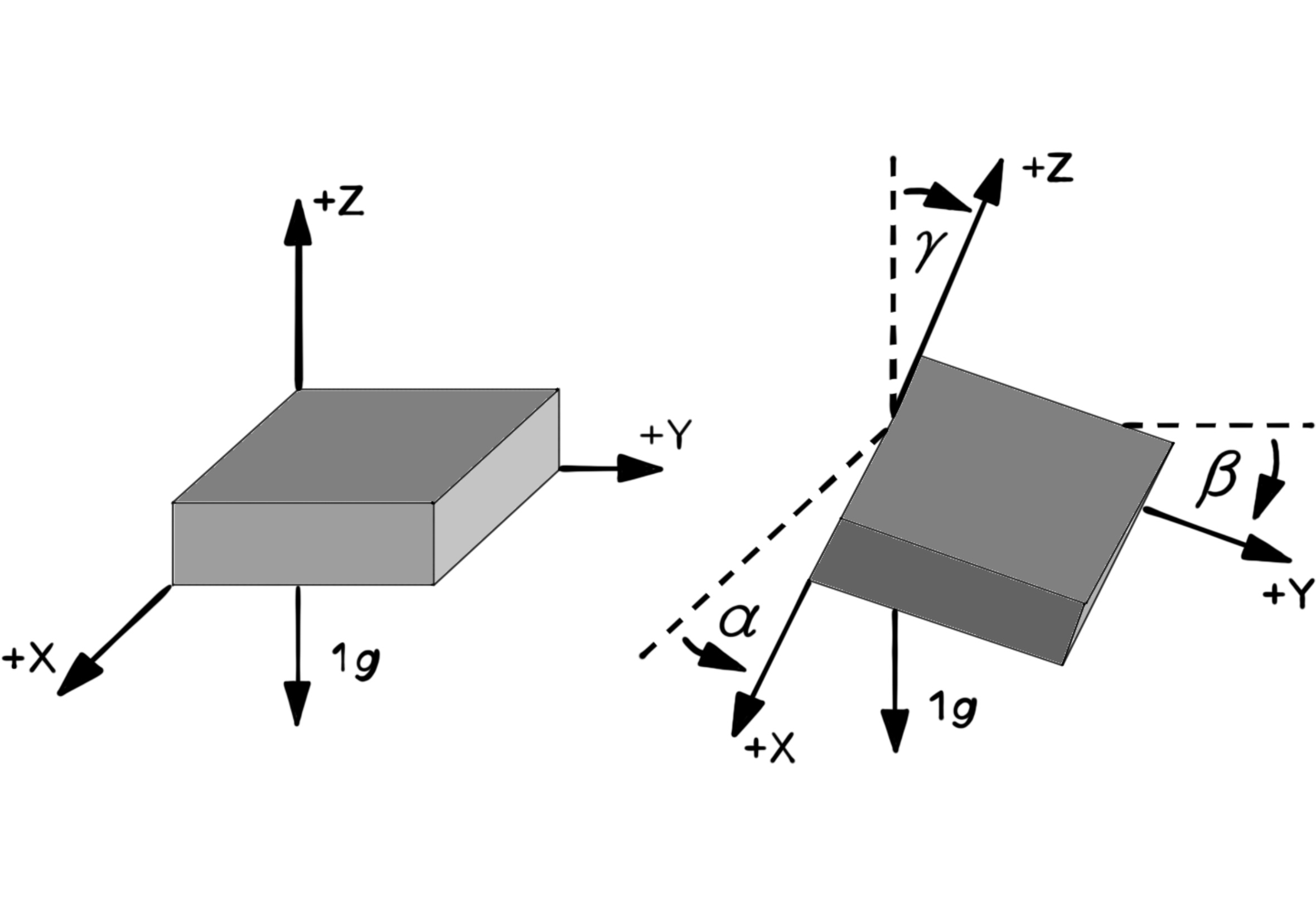

Using a tri-axis accelerometer for measuring the direction of the Earth's gravity in the three-dimensional space. In this figure, the orientation of the three-axis accelerometer (X-Y-Z) with respect to the hirozontal plane and the direction of gravity is computable using the given relations.

$\alpha = tan^{-1}(\frac{A_{X,OUT}}{\sqrt{A^2_{Y,OUT} + A^2_{Z,OUT}}})$

$\beta = tan^{-1}(\frac{A_{Y,OUT}}{\sqrt{A^2_{X,OUT} + A^2_{Z,OUT}}})$

$\gamma = tan^{-1}(\frac{\sqrt{A^2_{X,OUT} + A^2_{Y,OUT}}}{A_{Z,OUT}})$

Note that knowing the angles between each axis and the direction of gravity, does not give the general angular orientation of the accelerometer in the three-dimensional space. In fact, if this accelerometer is rotated about an axis parallel to gravity, all three axes will measure the same result compared to before the rotation. The reason for the Z-Euler angle not being observable is that the projection of the gravity vector onto the horizontal plane looks like a point (approximately the zero vector). In order to determine the angular orientation of the accelerometer in a complete way, it needs to measure at least two known vectors that are not parallel with respect to each other (the gravity vector and another vector). In every case, using a three-axis accelerometer one can build an electronic balance that can measure tilt in two mutually perpendicular directions.

Many manufacturers, make two-axis and tri-axis accelerometers as one chip, where two and three accelerometers (respectively) are placed in perpendicular directions in a unit package.

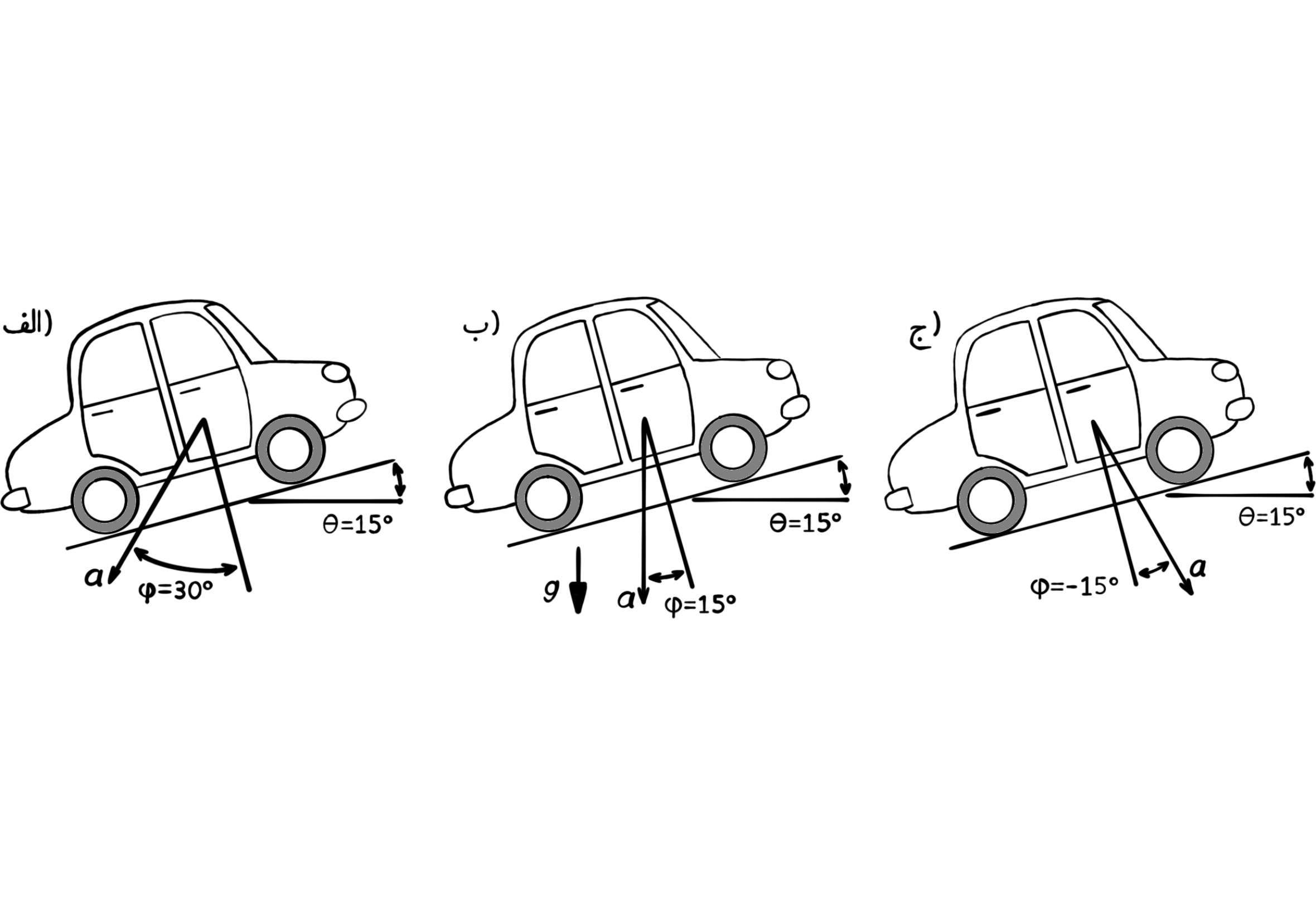

One of the fundamental problems of using accelerometrs to measure deviation, is the effect of dynamical accelerations (caused by changes in velocity) on the measurement of direction. For example, if you install such a device on a car and want to measure the slope of the road, the measured direction is correct as long as the vehicle has constant velocity. But when the car's velocity changes, the vector of dynamical acceleration is added to the vector of static acceleration and your measuring device measures the direction of this new vector (which is different from the direction of the Earth's gravity). One other disadvantage of accelerometers is the sensitivity to vibrations and the production of noisy results.

Estimating the slope of the road through measuring the direction of gravity using the accelerometer that is embedded in the car. In (a) the car has positive acceleration (increasing velocity), and in (b) without acceleration (constant velocity), and in (c) the car has negative acceleration (braking). As you can see, only in the figure (b) the direction of gravitational acceleration and the slope of the road are measured correctly.

Sensitivity to vibrations and dependence on dynamical accelerations, make it necessary to get help from other sensors such as gyroscopes and the magnetic field sensor (the electronic compass) for measuring the direction of the Earth's gravity.

To choose an accelerometer, one should pay attention to the measurement range, the sampling rate, the interface (the analog or digital communication protocol) and also the number of axes that are needed in the project (the number dimensions). Other parameters that should be considered in MEMS accelerometers are sensitivity to temperature and supply voltage changes, and the existence of an initial offset (the value read at zero acceleration), which is corrected with calbration. Here, we show how to use three-axis accelerometers.

Gyroscopes

As you know, a gyroscope essentially measures the angular velocity about an axis. A rotation about an axis is measured with a specific value (often in terms of degrees per second $\frac{deg}{s}$) and a rotation in the opposite direction results in a value with the opposite sign, and in the case where the rotation is stopped, the value of zero is measured. Mechanical gyroscopes that work based on coriolis forces / the coriolis effect of a rotating mass, had been used in airplanes and rockets for a while until optical gyroscopes and various kinds of MEMS were built. Among gyroscopes, optical gyroscopes are the most accurate, whereas the MEMS gyroscopes are the cheapest and the most applied type of this measuring device.

Unlike accelerometers, a gyroscope is not generally sensitive to vibrations and produces more continuous measurement results. But, since the angular velocity is not that useful on its own, and the angular orientation is more useful to mobile vehicles, the output of this sensor is integrated to extract the angular position. Having an integrator in a positioning system based on gyroscopes causes the accumulation of the smallest offsets and unavoidable permanent errors over time to produce greater errors. As such, the angular position computed by the integrator using the output of the gyroscope drifts away from the real value over time, so much that after the passgae of a few minutes (or even a few seconds) the computed values are no longer valid. This issue forces the use of other sensors such as a detector of the Earth's magnetic field or an acclerometer along with the gyroscope, unless the goal of measurement is only the angular velocity and not the angular position, in which case the integrator is removed and the output of the gyroscope will be accurate enough.

Like MESMS accelerometers, MEMS gyroscopes are manufactured in small sizes with afforable prices, and many manufacturers provide two or three gyroscopes in a single electronic package for measurements along different directions that are perpendicular with respect to one another.

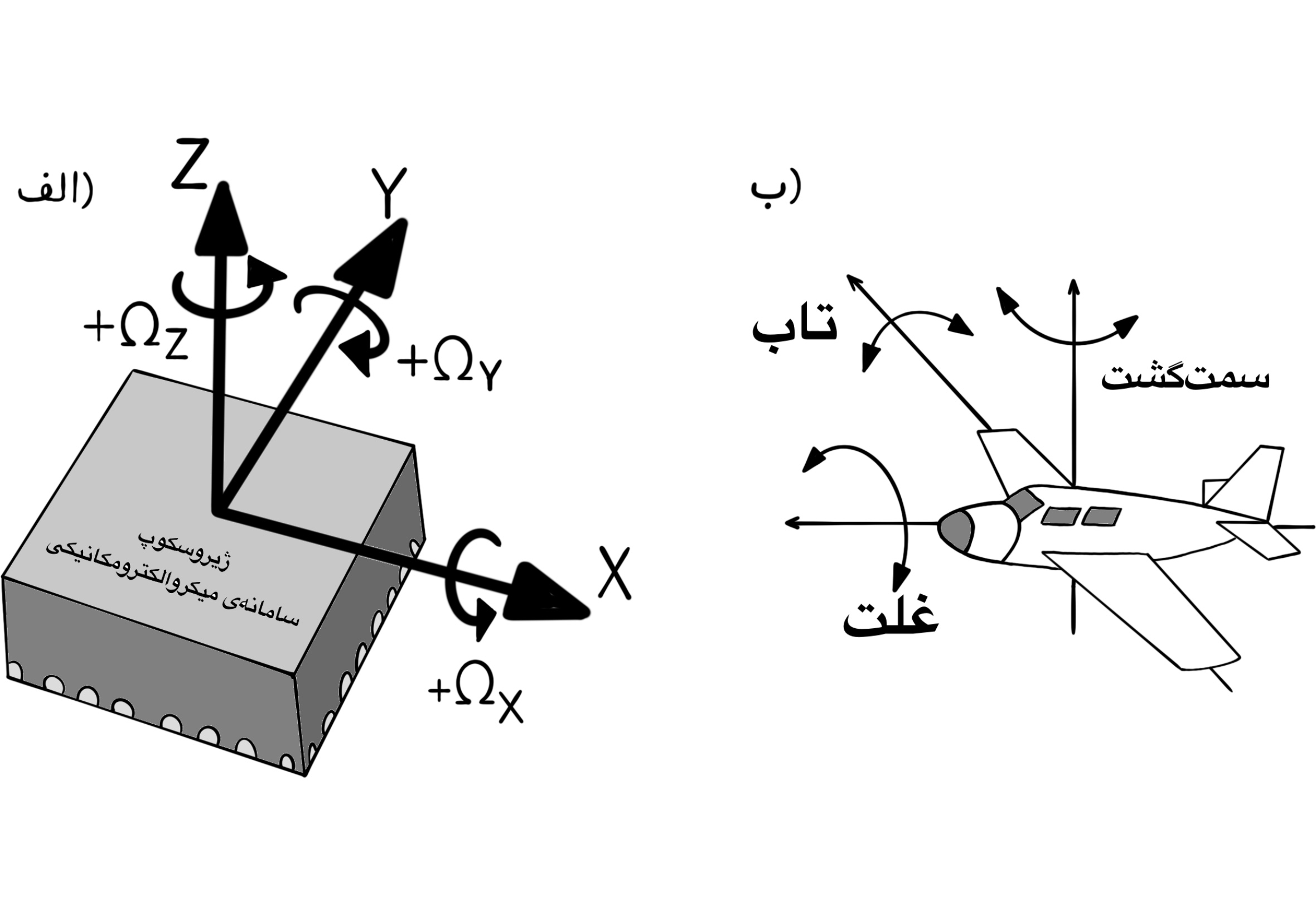

A three-axis gyroscope measures the angular velocity about three mutually perpenducular axes (X, Y and Z). Ususally, the right-handed rotation about each axis is denoted by the positive sign and the left-handed rotation is denoted by the negative sign. The angular velocity is expressed in terms of degrees per second $\frac{deg}{s}$. In flying vehicles such as rockets and airplanes and also some mobile robots, the names Roll, Yaw and Pitch are used to label the rotation axes. The axes Roll, Yaw and Pitch are not necessarily aligned with the X, Y and Z axes and this fact depends on the assignment of the coordinate system axes to the mobile object.

When choosing a gyroscope, one should consider its measurable velocity range, the sampling rate, the way to communicate with it (analog or digital), and also the number of required axes in the project (the number of dimensions). In addition to those parameters, consider issues such as the sensitivity to temperature and supply voltage changes, the initial offset (the value read in the stationary state) and the cross-sxis sensitivity. If a gyroscope is rotated around an axis perpendicular to the measurement axis, it should measure the value zero, but it is not the case in practice. The gyroscope can show sensitivity to rotation in directions other than the one that is to be measured. This value, which must be as small as possible, represents the cross-axis sensitivity and is expressed in terms of the error percentage.

Fusing the Output Data of Accelerometers with that of Gyroscopes

In this section we want to use the data of both accelerometers and gyroscopes in order to estimate the correct direction of gravity. A tri-axis accelerometer alone can be used to find the orientation with respect to the gravity vector. However, this estimate is correct only when there are no accelerations acting on the system other than the static gravitational acceleration. This is not possible in mobile robots. On top of that, an accelerometer is very sensitive to vibrations and due to too much noise, its output does not have much value on its own. In contrast, gyroscopes have their own disadvantages, the most important of which is the gradual drifting of the calculated angle from the real value, which is calculated using integration over time. Fortunately, the errors existing in acclerometers and gyroscopes are completely different in nature, such that by using the sensors in the proper way, one can correct both errors. For an effective application of the two sensors, one must fuse their data together in a way that the fused result is more valid than the result of each sensor on its own.

Assuming that there are no long-term dynamical accelerations acting on the system, and so assuming that the direction of gravity has been calculated in a correct way, you can subtract the gravity vector of the Earth (the direction of which is known and its magnitude is approximately equal to $9.8 \frac{m}{s^2}$) from the filtered information of the accelerometer to get the dynamical acceleration of the motion. By integrating the dynamical acceleration you can calculate the velocity of motion and the position of the robot. But these results are valid short-term because of the integral operation. To prevent the accumulation of error over a long period of time, it is necessary to add another positioning sensor such as the Global Positioning System (GPS) to the set.

As you know, in a positioning system including tri-axis gyroscopes and accelerometers (having six degrees of freedom), the gravity vector calculated using the accelerometer is like a yardstick that counteracts the effect of the gradual drift of the gyroscope from the estimation of the direction of gravity. But this vector does not have any projections on the horizontal plane. In addition, knowing the direction and magnitude of a known vector (like the gravity vector) in an orthogonal coordinate system, it is not possible to determine the direction of all three coordinate axes at the same time. In fact, one can show that there are an infinite number of coordinate systems that measure a particular vector the same (if you rotate a coordinate system about an axis parallel to that particular vector, all coordinate systems that are created as a result of rotating the coordinate system through various angles about the axis, measure the said vector the same). To detect the orientation of a coordinate system in the three-dimensional space, we have to have the measurement results of a pair of non-parallel vectors in the coordinate system. The coordinate system that we want to find the orientation of, is our Inertial Measurement Unit (IMU). That is why the output of a data fusion algorithm that uses the information of tri-axis accelerometers and gyroscopes, is not a valid source to find the orientation around the vertical axis (the Z-axis). To solve this problem one must use another vector that has a substantial projection on the horizontal plane. An electronic compass can accomplish this goal. Positioning systems in flying machines generally take advantage of all three sensors: compasses, gyroscopes and accelerometers (having nine degrees of freedom), and the fusion algorithms in them process the information of all of the sensors.

Estimating Tilt Using Accelerometers

In this section, we find an estimator for the roll and pitch angles of a rigid body that has only rotational degrees of freedom. This estimate is based on the measurements of multiple accelerometers that are mounted on the rigid body, such that it is assumed that we know their mounting positions and their directions of orientation. First, we describe the roblem setup in a formal way. Then, we find an estimate of the gravity vector in the frame of the robot. Finally, we use the gravity vector in order to calculate the roll and pitch angles. In this section, we follow the setup described in Sebastian Trimpe and Raffaello D’Andrea (2010) [2].

The Problem Setup

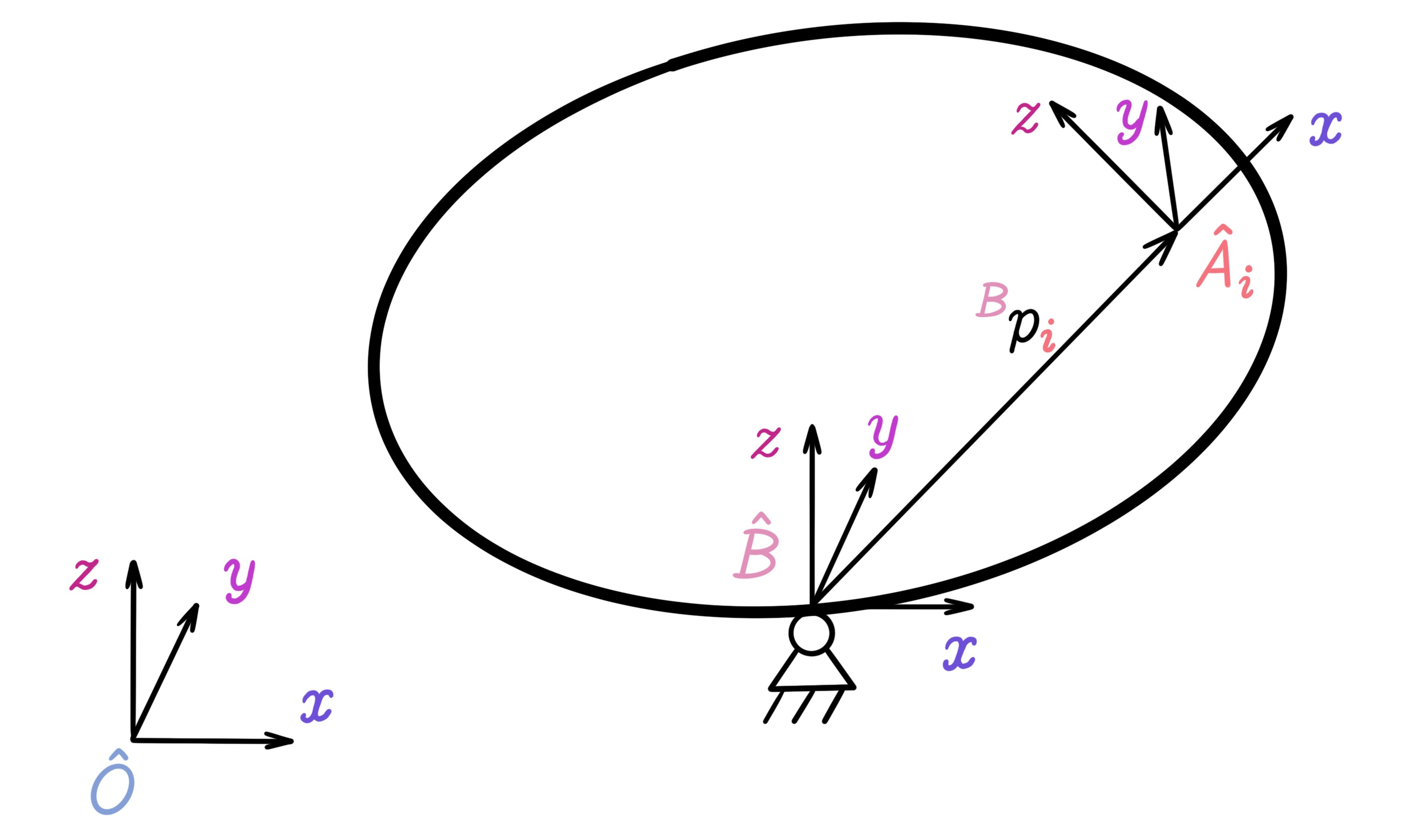

Suppose there is a rigid body that is standing on a fixed pivot point without friction. Therefore, the body has three rotational degrees of freedom, but has no translational degrees of freedom. The origin of the coordinate system of the body, which is denoted by $\hat{B}$, is at the center of rotation. The inertial reference frame is denoted by $\hat{O}$, the origin of which is coincident with the origin of the body's frame $\hat{B}$. On the body, there are $L$ sensors that are mounted at specific locations $p_i$, for $i = 1, ..., L$. It is assumed that the position of sesnors $^Bp_i$ is known in the reference frame of the body. Each sensor $\hat{A}_i$ measures the local acceleration along three directions in its local frame. Two rotation matrices are introduced to describe the rotation of the rigid body and the mounting orientaton of the sensors: the first matrix describes the rotation of the inertial reference frame $\hat{O}$ with respect to the body's frame $\hat{B}$, and the second matrix determines the rotation of the $i$th sensor's local reference frame with respect to the body's frame.

$\left\{ \begin{array}{l} ^{O}_{B}R &\\ ^{A_i}_{B}R \end{array} \right.$

For example, a vector $^Bv$ in the body's reference frame can be represented in the inertial reference frame with a matrix-vector multiplication $^{O}v = ^{O}_{B}R \ ^{B}v$.

An accelerometer, measures $^{A_i}m_i$ the acceleration vector $^O\ddot{p}_i$ at its mounting position in addition to the gravity vector $^Og$, which is rotated with respect to its local frame $\hat{A}_i$.

$^{A_i}m_i = ^{A_i}_{B}R \ ^{B}_{O}R (^{O}\ddot{p}_i + ^{O}g) + ^{A_i}n_i$ (Equation 1)

In equation 1, the measurement of the accelerometer is denoted by $^{A_i}m_i \in \mathbb{R}^3$ and the measurement noise is denoted by $^{A_i}n_i \in \mathbb{R}^3$. It is assumed that it is the white noise, and its value $\mathbb{E}[^{A}n_i \ (^{A}n_i)^T] = \sigma_n^2 I_3$ is limited by the zero mean $\mathbb{E}[^{A}n_i] = 0$ and the standard deviation $\sigma_n$. Here, the mathematical expected value is denoted by $\mathbb{E}$ and the matrix $I_3 \in \mathbb{R}^{3 \times 3}$ is the identity matrix of real dimension three. This model of noise is reasonable for acclerometers that are based on the MEMS technology provided that the bias is subtracted from it.

Using the coordinate transformation $^Op_i = ^O_BR ^Bp_i$ and the fact that $^Bp_i$ is constant in time $\frac{d \ ^{B}p_i}{dt} = 0$, we arrive at the conclusion that the acceleration $^O\ddot{p_i}$ in terms of the inertial reference frame $\hat{O}$ is calculated using the second derivative of the rotation matrix $^O_B\ddot{R}$ with respect to time $\frac{d^2 \ ^{O}_{B}R}{dt^2} = ^{O}_{B}\ddot{R}$. The matrix $^O_B\ddot{R}$ captures the dynamic terms of the rigid body: the rotational and centripetal acceleration terms.

$^{O}\ddot{p}_i = ^{O}_{B}\ddot{R} \ ^{B}p_i$ (Equation 2)

Using equation 2, one can rewrite the acceleration measurement in equation 1 as follows:

$^{A_i}m_i = ^{A_i}_{B}R \ ^{B}_{O}R (^{O}_{B}\ddot{R} \ ^{B}p_i + ^{O}g) + ^{A_i}n_i$ (Equation 3)

Since all of the rotational orientations of the sensors, denoted by $^{A_i}_BR$, are assumed to be known, we can represent the sensor measurements $^{A_i}m_i$ in terms of the body's frame of reference $\hat{B}$ by multiplying equation 3 on the left with the transpose of the rotation matrix $^B_{A_i}R = ^{A_i}_BR^T$.

$^{B}m_i = \tilde{R} ^{B}p_i + ^{B}g + ^{B}n_i$ (Equation 4)

In equation 4, the matrix $\tilde{R}$ combines the rotation of the body $^{B}_{O}R$ and the dynamic terms of the motion of the body $^{O}_{B}\ddot{R}$ using a matrix-matrix product: $\tilde{R} := ^{B}_{O}R \ ^{O}_{B}\ddot{R}$.

$^{B}g = ^{B}_{O}R \ ^{O}g$ (Equation 5)

Also in equation 4, the gravity vector $^{B}g$ is represented in terms of the body's reference fame $\hat{B}$, and the noise vector $^{B}n_i$ is rotated with respect to the body's frame $^{B}n_i = ^{B}_{A_i}R \ ^{A_i}n_i$. The mean of the noise after the coordinate transformation is still equal to zero $\mathbb{E}[^{B}n_i] = 0$, and the standard deviation of the noise is still limited to the scalar multiplication of $\sigma_n^2$ with the three-dimensional identity matrix: $\mathbb{E}[^{B}n_i (^{B}n_i)^T] = \sigma_n^2 I_3$.

Suppose that the measurements are done at a rate $T$ and the time index is introduced as $k$. So we can rewrite equation 4 like the following:

$^{B}m_i(k) = \tilde{R}(k) \ ^{B}p_i + ^{B}g(k) + ^{B}n_i(k)$ (Equation 6)

With the given measurements that are done in equation 6 for every sensor from $i$ through $L$ at time $k$, the objective is to estimate the tilt of the rigid body at time $k$, which is captured by the matrix $^B_OR$. As an intermediate step, an estimate of the gravity vector $^Bg(k)$ at time $k$ (and as a side product) an estimate of the matrix $\tilde{R}(k)$ is derived. Then, the estimate of the gravity vector is used to find the roll and pitch angles of the rigid body.

The Optimal Estimation of the Gravity Vector

In this section, the problem of the estimation of the gravity vector $^Bg$ in the reference frame of the robot's body $\hat{B}$ using the acceleration measurements $^Bm_i$ in equation 6, for $i = 1, ..., L$, is described as a least-squares problem. All of the measurements in equation 6, which are $L$ in number, are combined in a matrix equation where the time index $k$ is removed for convenience.

$M = QP + N$ (Equation 7)

In equation 7, the matrix denoted by $M$ combines the measurements of all sensors, $Q$ denotes the matrix of unknown parameters, whereas $P$ denotes the matrix of known parameters.

$M := \begin{bmatrix} ^{B}m_1 & ^{B}m_2 & \ldots & ^{B}m_L \end{bmatrix} \in \mathbb{R}^{3 \times L}$ (Equation 8)

$Q := \begin{bmatrix} ^{B}g & \tilde{R} \end{bmatrix} \in \mathbb{R}^{3 \times 4}$ (Equation 9)

$P := \begin{bmatrix} 1 & 1 & \ldots & 1 \\ ^{B}p_1 & ^{B}p_2 & \ldots & ^{B}p_L \end{bmatrix} \in \mathbb{R}^{4 \times L}$ (Equation 10)

$N := \begin{bmatrix} ^{B}n_1 & ^{B}n_2 & \ldots & ^{B}n_L \end{bmatrix} \in \mathbb{R}^{3 \times L}$

The letter $N$ in equation 7 denotes the matrix that combines all of the noise vectors, meaning its expected value is equal to zero $\mathbb{E}[N] = 0$, and its standard deviation is limited by the scalar multiplication of the $L$-dimensional identity matrix $I_L$ with $\sigma_N^2 := \sqrt{3} \sigma_n$:

$\mathbb{E}[N^T N] = 3\sigma_n^2 I_L = \sigma_N^2 I_L$.

Other than the gravity vector $^Bg$, which is what we are after, the unknown parameters matrix $Q$ also contains the matrix $\tilde{R}$, combining the rotation of the body $^{B}_{O}R$ and the dynamic terms of the motion of the body $^{O}_{B}\ddot{R}$. In the follwoing, a scheme for the optimal estimation of the entire matrix $Q$ is described, even though the gravity vector motivates the tilt estimation. In other applications, one might also be interested in estimating the dynamic terms, denoted by $\ddot{R}$, which is derived from the matrix $\tilde{R} = ^{B}_{O}R \ ^{O}_{B}\ddot{R}$ once the rotation matrix $^B_OR$ is found.

The objective is to find an estimate of the optimal matrix $\hat{Q}^*$ of the unknown parameters matrix $Q$ such that the expected value of the optimization error is minimized (see equation 11 where $||.||_F$ denots the Frobenius matrix norm), subjected to the fact that $\mathbb{E} [\hat{Q}] = Q$ the expected value of the matrix $\hat{Q}^*$ equals the matrix $Q$.

$\underset{\hat{Q}}{min} \ \mathbb{E} \ [|| \hat{Q} - Q ||^2_F]$ (Equation 11)

The estimate of the matrix $\hat{Q}$ is restricted to linear combinations of the measurements $M$, that is, we look for an optimal matrix $X^*$ so that we can decompose the matrix $Q$ as a matrix-matrix product $\hat{Q} = MX$. This way results in a straight-forward implementation: at each time step, the estimate of the matrix $Q$ is calculated by a matrix-matrix product. Note that in equation 7, the matrices $M$, $Q$ and $N$ are variable in time. At each time $k$, according to the measurements of the matrix $M$, we want to find an optimal estimate of the unknown parameters matrix $Q$. The next lemma, states the best unbiased linear estimate of the full parameter matrix $Q$.

The Full Estimation Lemma

Given the real matrices $P \in \mathbb{R}^{4 \times L}$ and $M \in \mathbb{R}^{3 \times L}$ satisfying $M = QP + N$ with unknown matrix $Q \in \mathbb{R}^{3 \times 4}$ and the matrix random variable $N \in \mathbb{R}^{3 \times L}$ with $\mathbb{E}[N] = 0$, $\mathbb{E}[N^T N] = \sigma_N^2 I_L$. Assuming $P$ has full row rank, the (unique) minimizer $X^* \in \mathbb{R}^{L \times 4}$ of

$\underset{X}{min} \ \mathbb{E} \ [||MX - Q||_F^2]$ subjected to $\mathbb{E} \ [MX] = Q$ (Equation 12)

is given by

$X^* = P^T (PP^T)^{-1}$. (Equation 13)

The minimum estimation error is

$\mathbb{E} \ [|| MX^* - Q ||_F^2] = \sigma_N^2 \sum_{i = 1}^4 \frac{1}{s_i^2(P)}$, (Equation 14)

where $s_i(P)$ denotes the $i$th largest singular value of $P$.

The Proof of the Full Estimation Lemma

Since $\mathbb{E}[MX] = \mathbb{E}[M]X = QPX$, it is required that

$PX = I$ (Equation 15)

to satisfy $\mathbb{E}[MX] = Q$. Next, consider the singular value decomposition (SVD) of $P$,

$P = U \begin{bmatrix} \Sigma & 0 \end{bmatrix} \begin{bmatrix} V_1^T \\ V_2^T \end{bmatrix}$, (Equation 16)

with $U \in \mathbb{R}^{4 \times 4}$ unitary, $\Sigma \in \mathbb{R}^{4 \times 4}$ diagonal, $V_1 \in \mathbb{R}^{L \times 4}$, $V_2 \in \mathbb{R}^{L \times (L - 4)}$, and $V = \begin{bmatrix} V_1 & V_2 \end{bmatrix}$ unitary. From the full row rank assumption on $P$, it follows that $\Sigma$ is positive definite. Therefore, a parameterization of all $X$ that satisfy equation (15) is given by

$X = V_1 \Sigma^{-1}U^T + V_2 \bar{X}$, (Equation 17)

where $\bar{X} \in \mathbb{R}^{(L - 4) \times 4}$ is a free parameter matrix. Thus, $\bar{X}$ needs to be chosen such that equation (12) is minimized: Using equations (7), (15) and basic peoperties of the trace operation, yields

$\mathbb{E} \ [||MX - Q||_F^2] = \mathbb{E} \ [||NX||_F^2]$

$= \mathbb{E} \ [trace(X^T N^T N X)] = trace(\mathbb{E} \ [N^T N] X X^T)$

$= \sigma_N^2 \ trace(X X^T) = \sigma_N^2 \ trace(V^T X (V^T X)^T)$

$= \sigma_N^2 ||\begin{bmatrix} \Sigma^{-1} U^T \\ \bar{X} \end{bmatrix}||_F^2$, (Equation 18)

which is minimized by $\bar{X} = 0$. Therefore,

$X^* = V_1 \Sigma^{-1} U^T = P^T (P P^T)^{-1}$,

which can readily be seen by inserting equation (16) for $P$, and

$\mathbb{E}[||MX^* - Q||_F^2] = \sigma_N^2 ||\Sigma^{-1} U^T||_F^2 = \sigma_N^2 ||\Sigma^{-1}||_F^2$.

$\square$

The optimal estimate $\hat{Q} = MX^*$ includes both the optimal estimate of the gravity vector $^Bg$ and of the dynamics matrix $\tilde{R}$. Since, for tilt estimation, only the former is of interest, one needs to ask if $X^*$ is also optimal if one seeks only an estimate of parts of the unknown matrix $Q$. The following lemma states that this is indeed the case.

The Partitioned Estimation Lemma

Let the matrices $Q$, $P$, $N$ and $M$ be defined as in the full estimation lemma. Furthermore, let $Q = \begin{bmatrix} Q_1 & Q_2 \end{bmatrix}$, with

$\left\{ \begin{array}{l} Q_1 \in \mathbb{R}^{3 \times q} &\\ Q_2 \in \mathbb{R}^{3 \times (4 - q)} \end{array} \right.$

where $1 \leq q \leq 4$. Assuming $P$ has full row rank, the (unique) minimizer $Y^* \in \mathbb{R}^{L \times q}$ of

$\underset{Y}{min} \ \mathbb{E} \ [|| MY - Q_1 ||_F^2]$ subjected to $\mathbb{E} \ [MY] = Q_1$ (Equation 19)

is $Y^* = X_1^*$, where $X^* = \begin{bmatrix} X_1^* & X_2^* \end{bmatrix}$ is the solution of the full estimation lemma.

The Proof of the Partitioned Estimation Lemma

It needs to be shown that $Y = X_1^*$ satisfies equation (19). First, since $X^* = \begin{bmatrix} X_1^* & X_2^* \end{bmatrix}$ satisfies $\mathbb{E} \ [M X] = Q$ in equation (12),

$\mathbb{E} \ [ \begin{bmatrix} M X_1^* & M X_2^* \end{bmatrix} ] = \mathbb{E} \ [M X^*] = Q = \begin{bmatrix} Q_1 & Q_2 \end{bmatrix} \longrightarrow$

$\left\{ \begin{array}{l} \mathbb{E} \ [M X_1^*] = Q_1 &\\ \mathbb{E} \ [M X_2^*] = Q_2 \end{array} \right.$.

Then,

$|| M X^* - Q ||_F^2 = || \begin{bmatrix} M X_1^* - Q_1 & M X_2^* - Q_2 \end{bmatrix} ||_F^2 = || M X_1^* - Q_1 ||_F^2 + || M X_2^* - Q_2 ||_F^2$

i.e. $X^*$ minimizes both terms in the last expression separately and $X_1^*$ thus minimizes $|| M X_1^* - Q_1 ||_F^2$ alone.

$\square$

Applying the partitioned estimation lemma with $q = 1$ yields the optimal gravity vector estimate $^B\hat{g}(k)$ at time $k$ given all sensor measurements $M(k)$,

$^{B}\hat{g}(k) = M(k) X_1^*$, (Equation 20)

with $X_1^* \in \mathbb{R}^{L \times 1}$. The optimal fusion vector $X_1^*$ is static and completely defined by the geometry of the problem (through $P$) and can thus be computed offline.

Note that the gravity vector estimate in equation (20) is independent of the rigid body dynamics, which are captured in $^O_B\ddot{R}$ (and thus in $\tilde{R}$). This can be seen from

$^{B}\hat{g} = M X_1^* = Q P X_1^* + N X_1^*$

$= \begin{bmatrix} ^{B}g & \tilde{R} \end{bmatrix} \ U \Sigma V_1^T \ V_1 \Sigma^{-1} U_1^T \ + N X_1^*$

$= \begin{bmatrix} ^{B}g & \tilde{R} \end{bmatrix} \ P \ X_1^* \ + N X_1^*$

$= \begin{bmatrix} ^{B}g & \tilde{R} \end{bmatrix} \begin{bmatrix} U_1 \\ U_2 \end{bmatrix} U_1^T + N X_1^*$

$= \begin{bmatrix} ^{B}g & \tilde{R} \end{bmatrix} \begin{bmatrix} 1 \\ 0 \end{bmatrix} + N X_1^* = ^{B}g + N X_1^*$,

where the SVD of $P$ in equation (16) has been used. Clearly, the matrix $\tilde{R}$ does not appear in the estimate, i.e. the gravity vector observation is not corrupted by any dynamic terms. As expected, the sensor noise does enter the estimation equation.

The Physical Interpretation of the Full Row Rank Condition

Both in the full estimation lemma and the partitioned estimation lemma, the matrix $P$, which contains the sensor locations on the rigid body, is assumed to have full row rank. In the following, a physical interpretation of this rank condition is given.

Consider the case where $P$ does not have full row rank. Then, there exists a nontrivial linear combination of the rows of $P$,

$\exists \lambda \neq 0 \in \mathbb{R}^4 : \lambda_1 p_x + \lambda_2 p_y + \lambda_3 p_z + \lambda_4 \textbf{1} = 0$, (Equation 21)

where $p_x^T, \ p_y^T, \ p_z^T \in \mathbb{R}^{1 \times L}$, denote the last three rows of $P$ (the vectors of $x$, $y$ and $z$-coordinates of all sensor locations, respectively) and $\textbf{1}^T \in \mathbb{R}^{1 \times L}$, the vector of all ones, is the first row of $P$. Expression (21) is equivalent to

$\exists \lambda \neq 0 \in \mathbb{R}^4 : \forall i = 1, ..., L$

$\lambda_1 \ ^{B}p_{i, x} + \lambda_2 \ ^{B}p_{i, y} + \lambda_3 \ ^{B}p_{i, z} = -\lambda_4$ (Equation 22)

where $^{B}p_{i, x}, \ ^{B}p_{i, y}, \ ^{B}p_{i, z} \in \mathbb{R}$ denote the $x$, $y$ and $z$-coordinate of the $i$th sensor location in the body frame. Since the equation $\lambda_1 x + \lambda_2 y + \lambda_3 z = -\lambda_4$ defines a plane in $(x,y,z)$-space, condition (22) is equivalent to all $L$ sensors lying on the same plane. Therefore, the full row rank condition on $P$ is satisfied if and if only not all sensors lie on a plane, this also implies that at least four tri-axis accelerometers are required for the proposed method.

Note that the gravity vector estimate given in the partitioned estimation lemma is optimal under the assumption that $P$ has full row rank. These results can be extended and the rank condition on $P$ can be relaxed when one only seeks only the gravity vector. For example, one could directly measure gravity with a single tri-axis accelerometer at the pivot, where the dynamic terms do not enter the measurements.However, this is not possible for the balancing unicycle application.

Tilt Estimation

With the estimate $^B\hat{g}$ of the gravity vector in the body frame, one can use equation (5) to estimate the body rotation, since the direction of the gravity vector in the inertial frame is known. In this project, the attitude of the rigid body is represented by $z$-$y$-$x$-Euler angles (yaw, pitch, roll), i.e. the body frame $\hat{B}$ is obtained by rotating the inertial frame $\hat{O}$ succesively about its $z$-axis, then the resulting $y$- and $x$-axis,

$^{O}_{B}R = R_z(\alpha) \ R_y(\beta) \ R_x(\gamma)$, (Equation 23)

$\left\{ \begin{array}{l} R_z(\alpha) := \begin{bmatrix} cos(\alpha) & -sin(\alpha) & 0 \\ sin(\alpha) & cos(\alpha) & 0 \\ 0 & 0 & 1 \end{bmatrix} &\\ R_y(\beta) := \begin{bmatrix} cos(\beta) & 0 & sin(\beta) \\ 0 & 1 & 0 \\ -sin(\beta) & 0 & cos(\beta) \end{bmatrix} &\\ R_x(\gamma) := \begin{bmatrix} 1 & 0 & 0 \\ 0 & cos(\gamma) & -sin(\gamma) \\ 0 & sin(\gamma) & cos(\gamma) \end{bmatrix} \end{array} \right.$,

where $\alpha$, $\beta$ and $\gamma$ are the yaw, pitch and roll Euler angles, respectively. With this representation, the tilt of the rigid body is captured by $\beta$ and $\gamma$. Using equation (23), equation (5) can be written as

$^{B}g = ^{O}_{B}R^T \ ^{O}g = R^T_x(\gamma) \ R^T_y(\beta) \ R^T_z(\alpha) \ ^{O}g$. (Equation 24)

Using $^{O}g = \begin{bmatrix} 0 & 0 & g_0 \end{bmatrix}^T$ with gravity constant $g_0$ and the definitions of the rotation matrices, equation (24) simplifies to

$^{B}g = R^T_x(\gamma) \ R^T_y(\beta) \ ^{O}g = g_0 \begin{bmatrix} -sin(\beta) \\ sin(\gamma) cos(\beta) \\ cos(\gamma) cos(\beta) \end{bmatrix}$. (Equation 25)

It follows that the $z$-Euler angle $\alpha$ is not observable from the accelerometer measurements.

Given the estimate of the gravity vector in equation (20), the accelerometric estimates for the $y$- and $x$-Euler angles at time $k$ are:

$\left\{ \begin{array}{l} \hat{\beta}_a(k) = atan2(-^{B}\hat{g}_x(k), \sqrt{^{B}\hat{g}_y^2(k) + ^{B}\hat{g}_z^2(k)}) &\\ \hat{\gamma}_a(k) = atan2(^{B}\hat{g}_y(k), ^{B}\hat{g}_z(k)) \end{array} \right.$, (Equation 26)

where $atan2$ is the four-quadrant inverse tangent. Note that one does not need to know the gravity constant $g_0$ for estimating tilt.

The variance of the angle estimates can be obtained from the variance of the gravity vector estimate, which can in turn be calculated from equation (18) and the SVD of $P$ in equation (16).

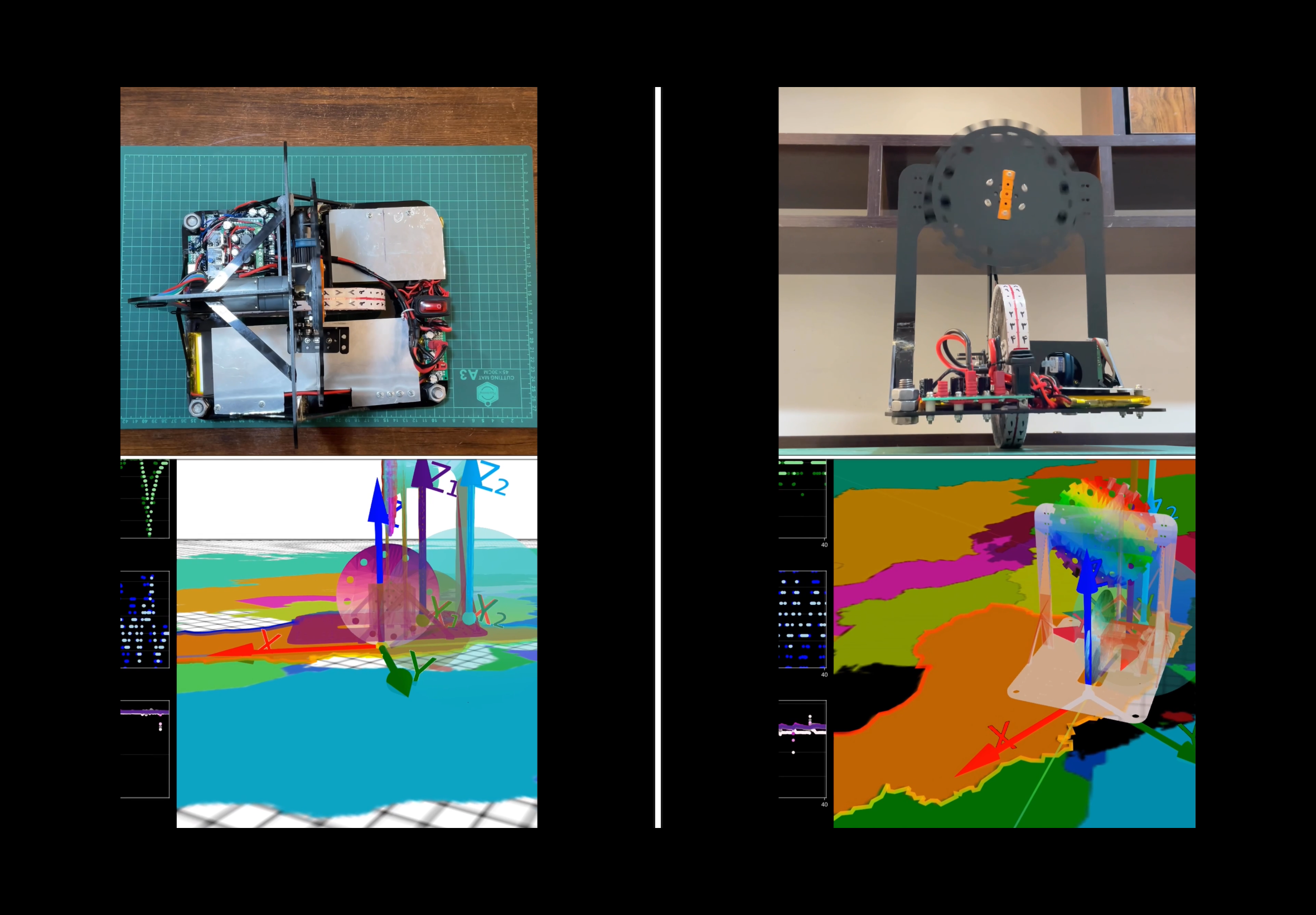

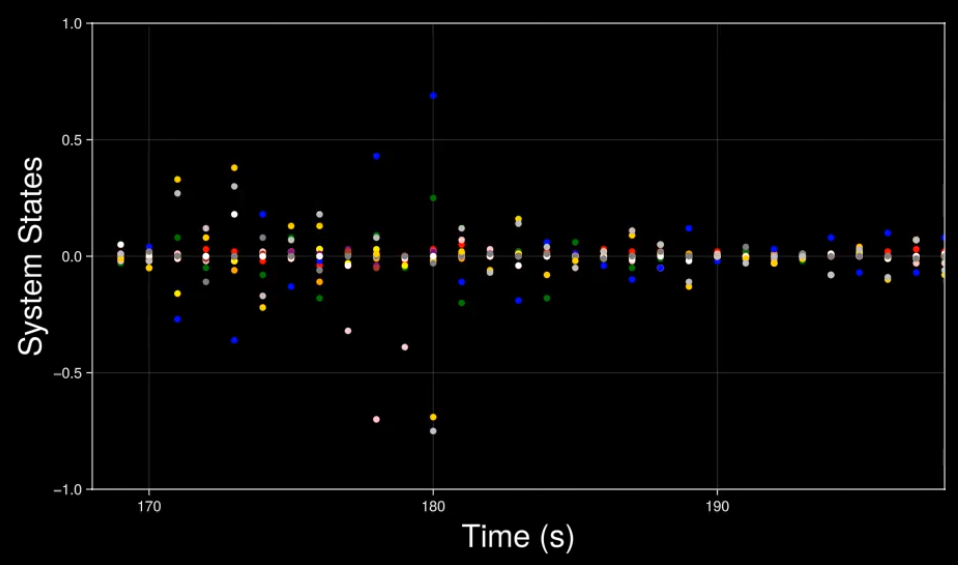

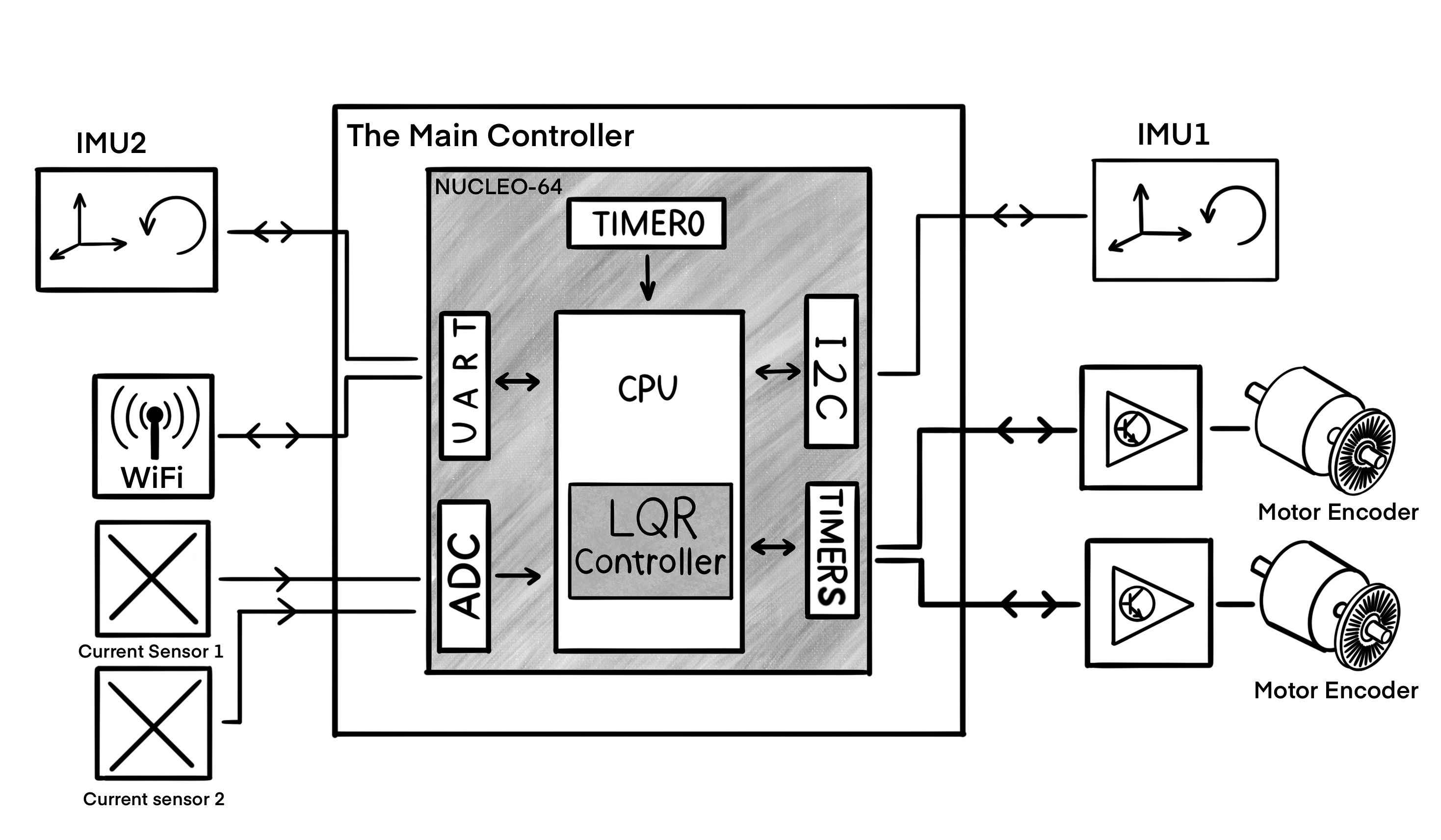

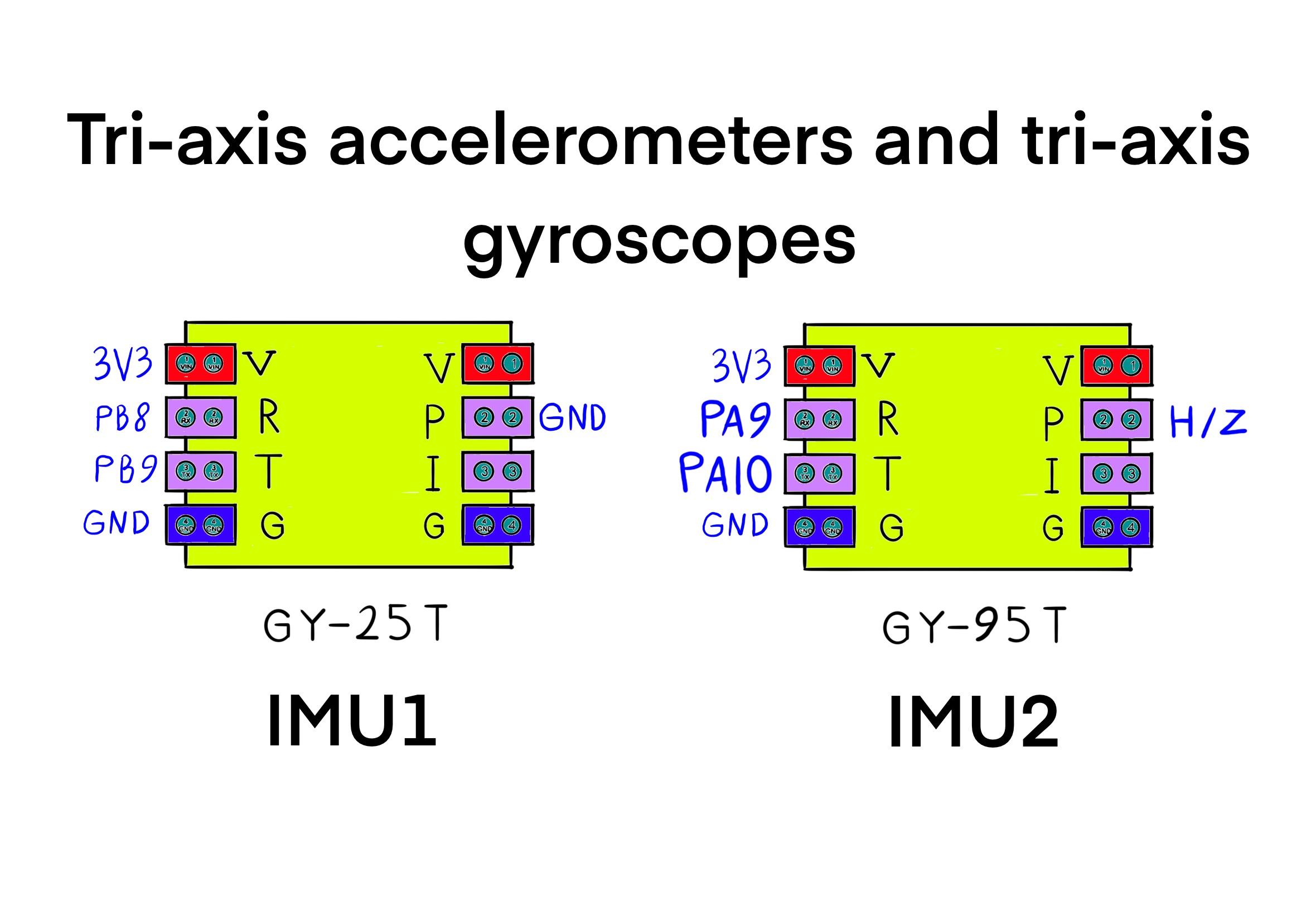

The Application of Tilt Estimation to the Balancing Unicycle

The tilt estimation algorithm is applied to the balancing unicycle robot. The passive structure of the unicycle is balanced on a wheel. There is a reaction wheel that is mounted on top of the rolling wheel, generating torque in the perpendicular direction for keeping the robot in equilibrium. The pitch and roll angles of the robot are estimated from measurements of two inertial measurement units (IMUs) with tri-axis accelerometers and rate gyros using the algorithm presented in the section above. The noise level of the estimate is further reduced by straightforward fusion with data from the rate gyros. Experimental results are provided in the next section.

The balancing unicycle robot consists of a rigid body in the shape of a rectangular cuboid with two orthogonally attached rotors. The objective is to balance the robot on the bottom wheel. In this configuration, the rigid body has three rotational degrees of freedom, and one translational degree of freedom. One can assume that the robot pivot does not slip due to friction, but rolls freely along a straight line. On the robot chassis, a pair of IMUs are mounted that measure accelerations and angular velocities each along three axes.

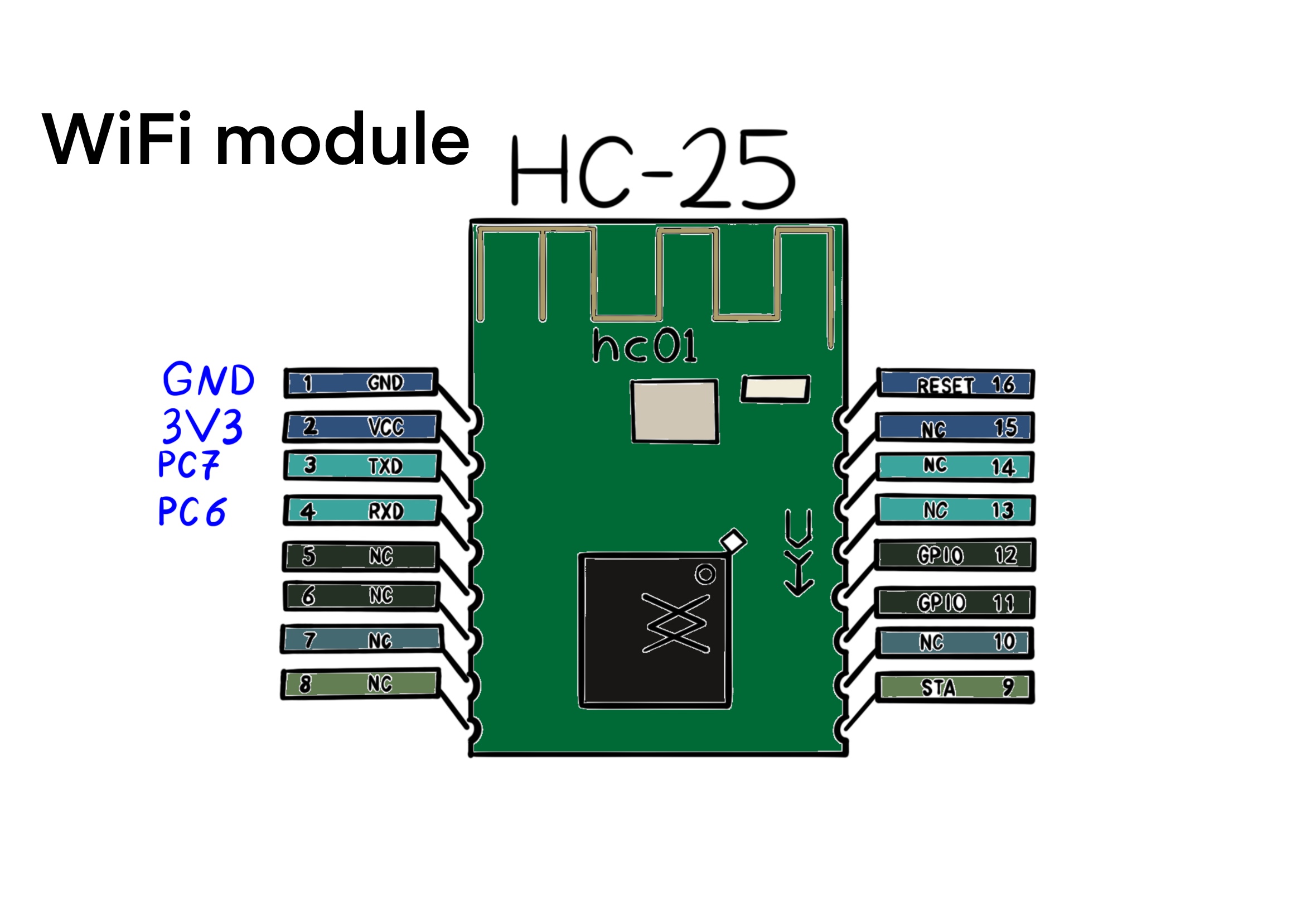

The wheels are actuated by DC motors and rotate relative to the robot structure. When the wheels rotate, they exert inertia reactional and ground reactional forces (by generating angular momenta and towing force) on the robot structure. An absolute encoder is used on each motor to measure the angle of a wheel relative to its mounting axle. Furthermore, the robot carries its own power unit and a computer that is connected to the sensors and the DC motors. The computer is also connected to a WiFi module to broadcast all its local sensor measurements to the terminal of a workstation.

A state feedback controller is designed that stabalizes the unstable equilibrium of an upright standing robot. Estimates for the chassis angles are obtained from the tilt estimation algorithm of the section above. Although the details of the controller design are explained in the next section, a brief explanation of the control system follows.

Foe both wheels, an inner feedback loop is closed for the chassis velocity. The inner-loop controller, which is the critic loop in the Actor/Critic architecture, ensures that velocity commands are tracked at a faster rate than the natural dynamics of the chassis. This way, one can neglect nonlinear effects such as friction and backlash in the actuation mechanism. A linear dynamical model (a linear quadratic regulator) about the equilibrium that takes into account the effect of the inner loop is obtained using a two-timescale technique, where the outer loop updates the actor of the controller. Updating the control policy in the outer-loop results in a stabalizing controller.

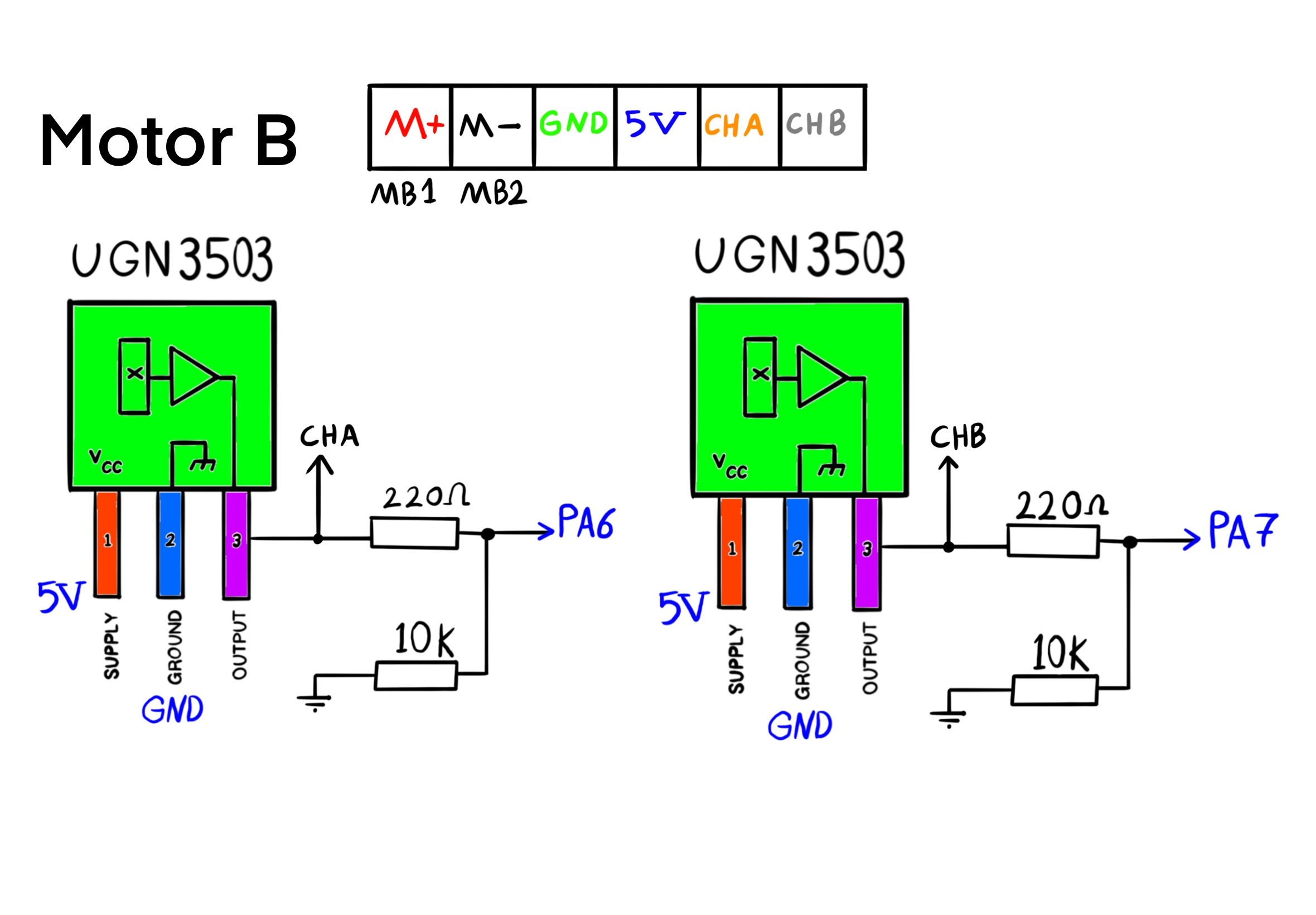

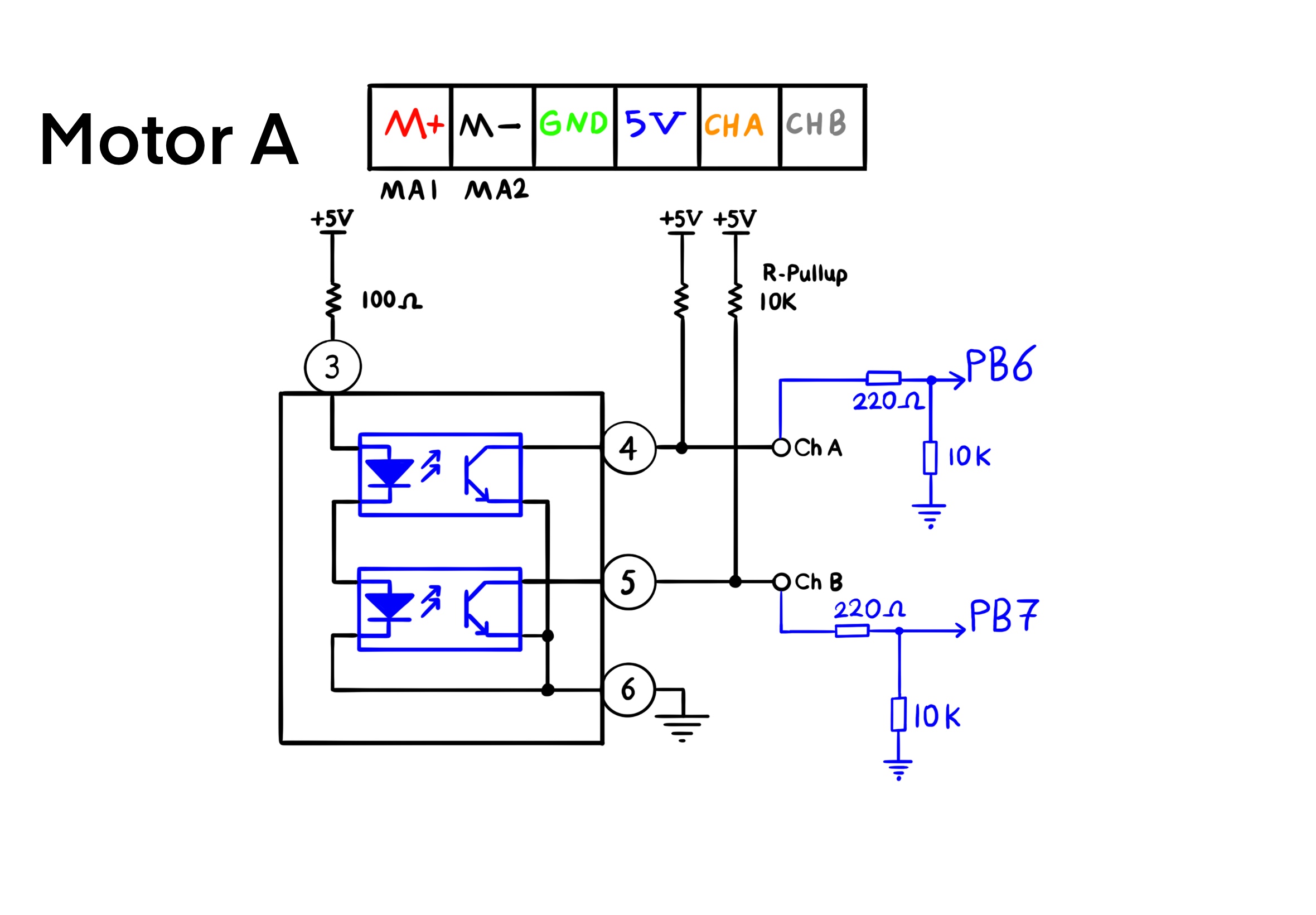

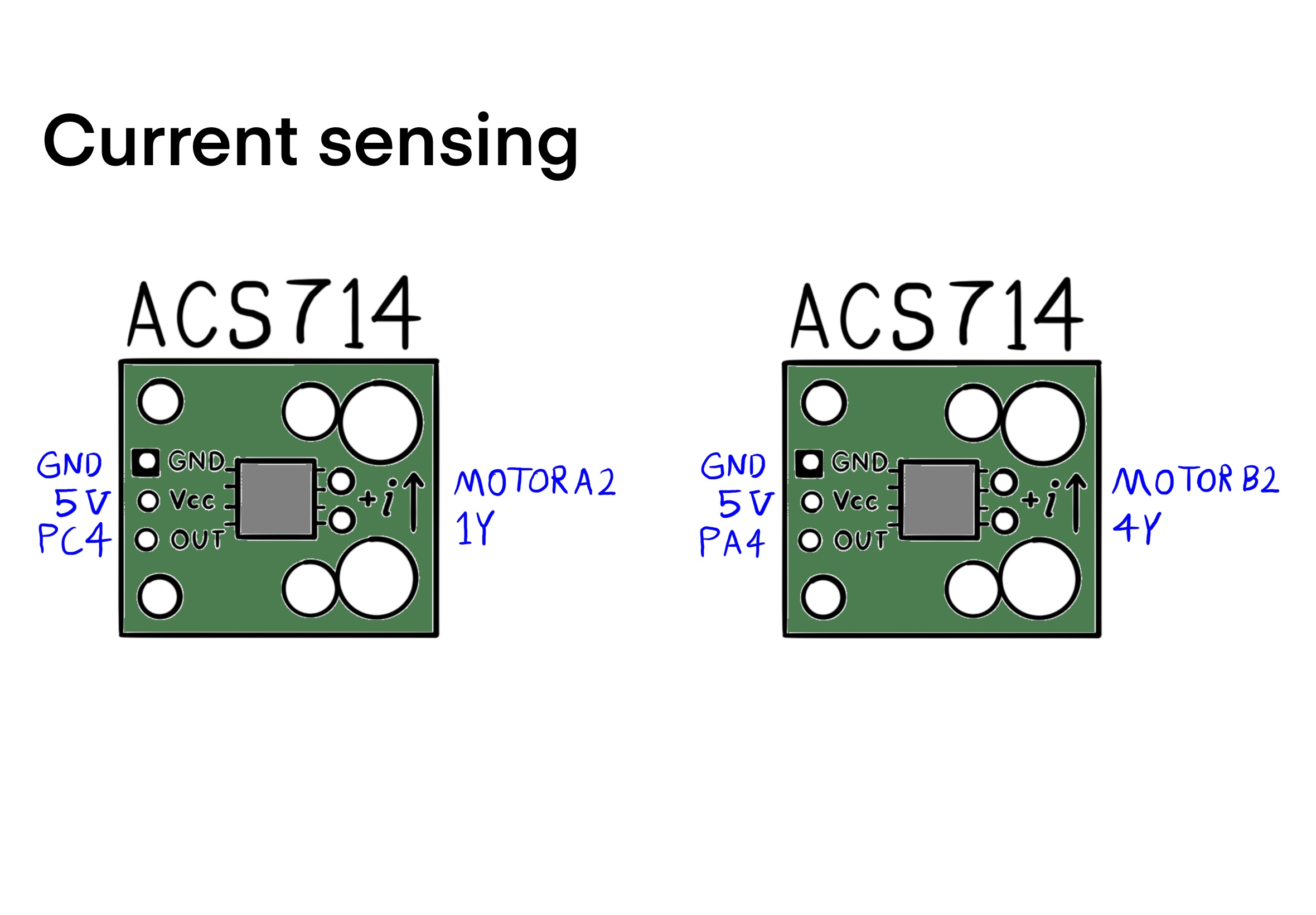

The sensors can generate estimates of all system states: the wheels' angular velocities are calculated using measurements by encoders, estimates of the motors' electrical current rates result from a difference equation using measurements by Hall effect sensors, and estimates of the robot's pitch and roll angles and their rates and accelerations are derived from the IMU measurements. Note that for balancing, knowledge of yaw is not required. Hence, a centralized full-state feedback LQR controller can be designed for stabalizing the system.

The Implemetation of the Tilt Estimation Algorithm

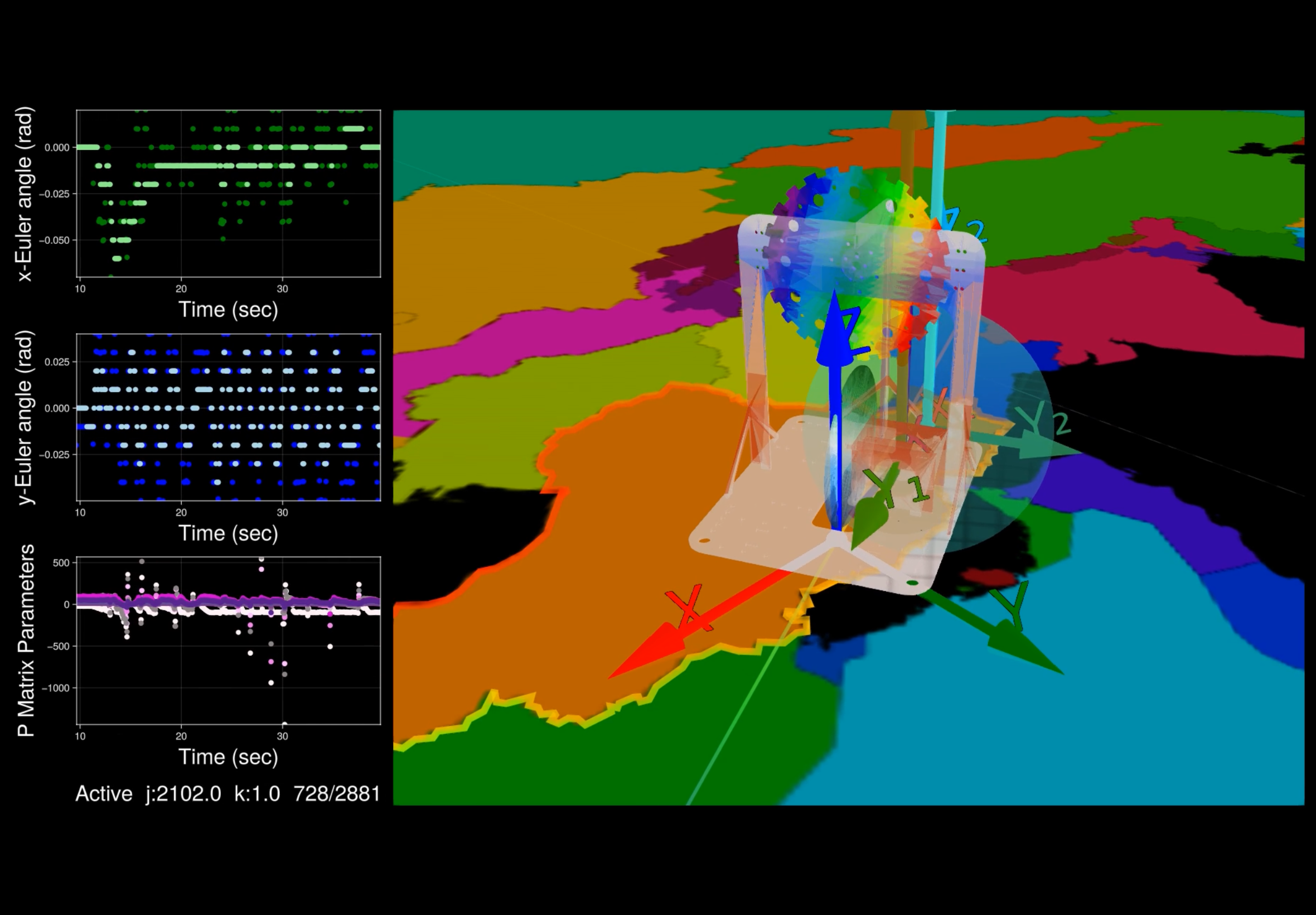

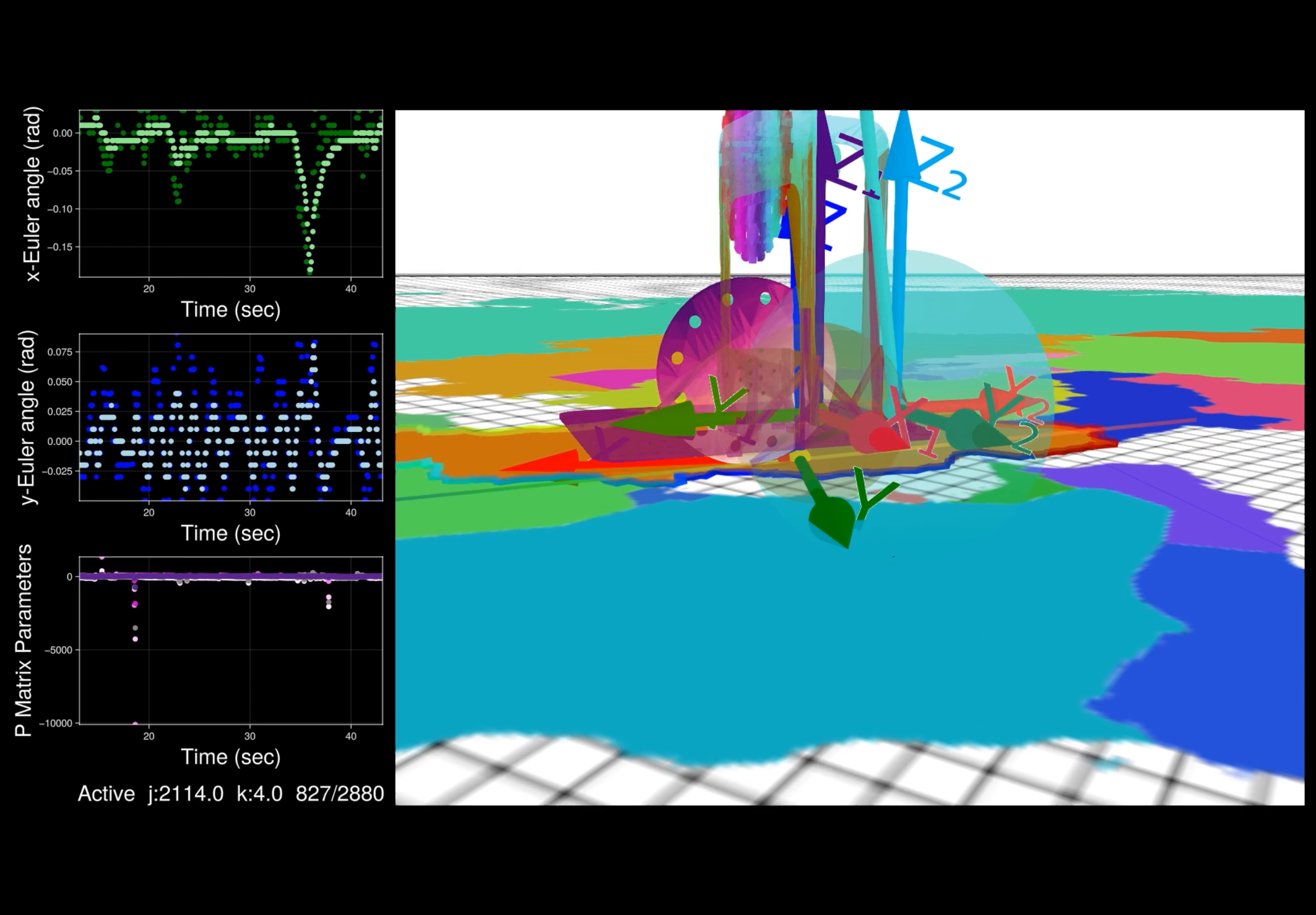

The coordinate frame definitions and the locations of the two IMUs and the pivot point on the robot's chassis are indicated in the figure below.

The position vectors of the sensors are

$^Op_1 = \begin{bmatrix} -0.140 & -0.065 & -0.062 \end{bmatrix}^T$ and

$^Op_2 = \begin{bmatrix} -0.205 & -0.055 & -0.06 \end{bmatrix}^T$.

Also the position of the pivot point in the inertial coordinate frame is

$^Opivot = \begin{bmatrix} -0.097 & -0.1 & -0.107 \end{bmatrix}^T$

With this data, matrix $P$ can be constructed as in equation (10).

$P \in \mathbb{R}^{4 \times L}$

$L = 2$

$P \in \mathbb{R}^{4 \times 2}$

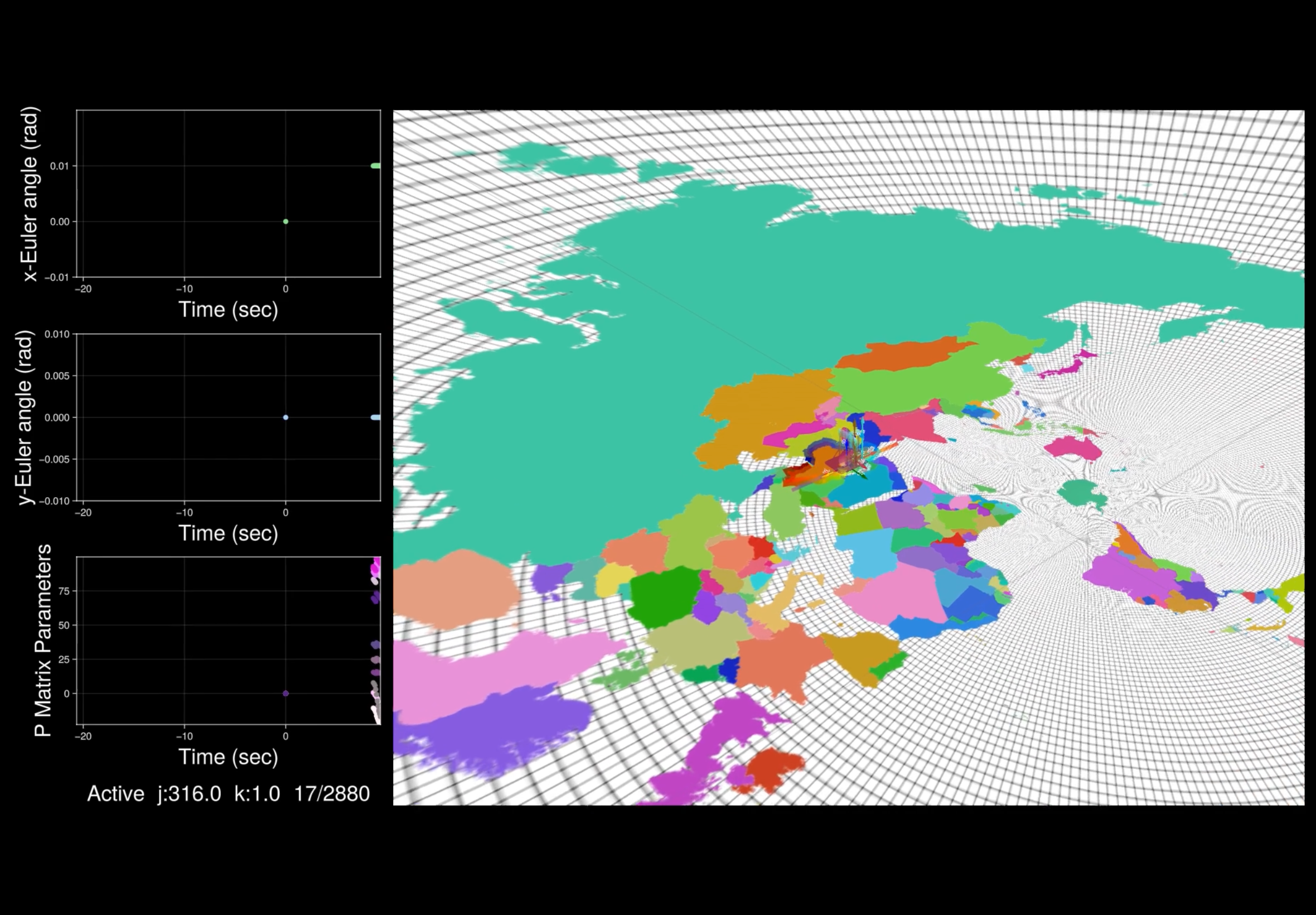

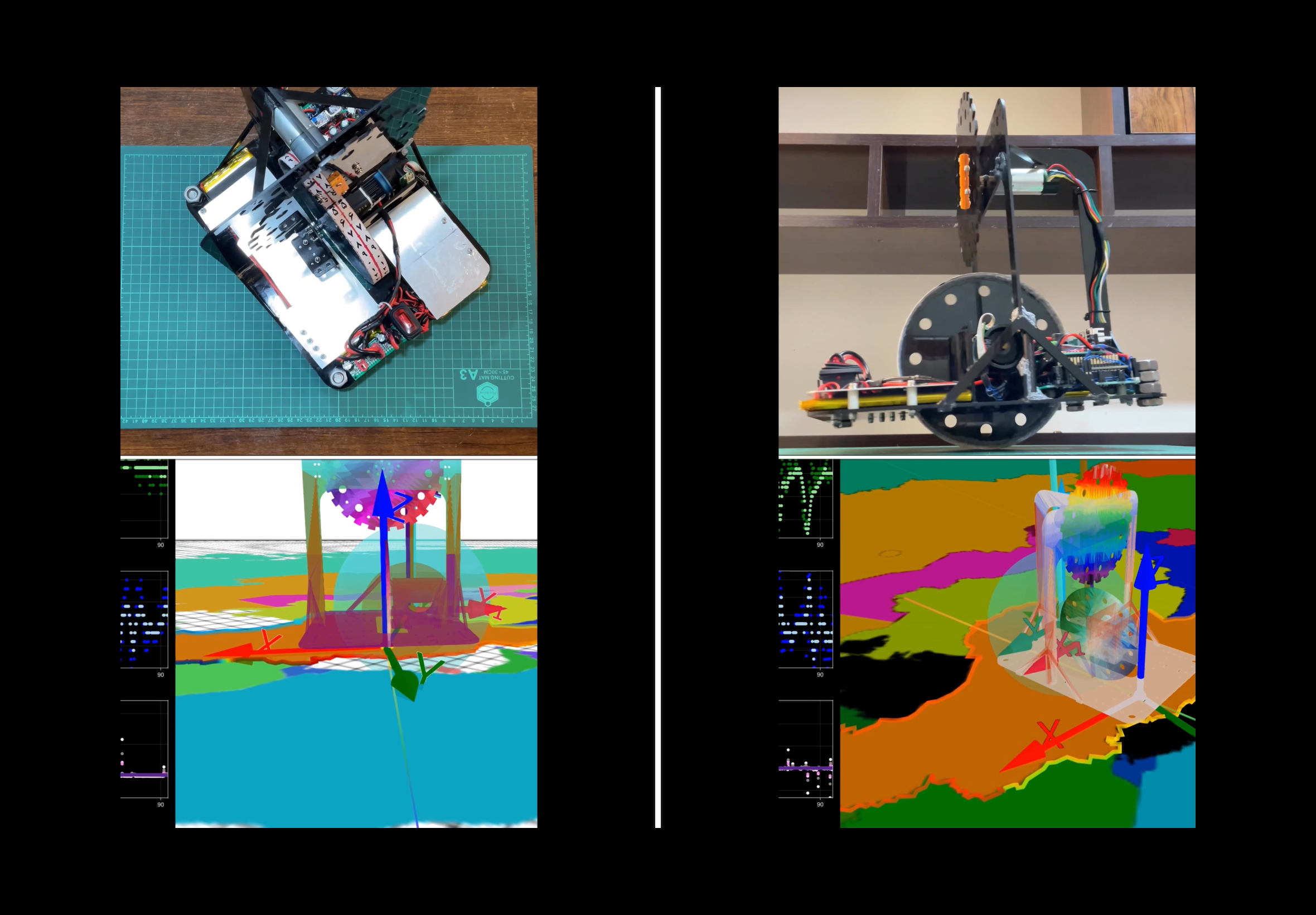

the unicycle graphical dashboard for taking the measurements

$^Op_1 = \begin{bmatrix} p_{1x} \\ p_{1y} \\ p_{1z} \end{bmatrix}$

$^Op_2 = \begin{bmatrix} p_{2x} \\ p_{2y} \\ p_{2z} \end{bmatrix}$

$^Opivot = \begin{bmatrix} pivot_x \\ pivot_y \\ pivot_z \end{bmatrix}$

$P = \begin{bmatrix} 1 & 1 \\ p_{1x} - pivot_x & p_{2x} - pivot_x \\ p_{1y} - pivot_y & p_{2y} - pivot_y \\ p_{1z} - pivot_z & p_{2z} - pivot_z \end{bmatrix}$

Applying the partitioned estimation lemma yields the optimal fusion matrix for estimating the gravity vector in the robot's body frame,

$X = P^T (P P^T)^{-1} \in \mathbb{R}^{L \times 4} \longrightarrow X \in \mathbb{R}^{2 \times 4}$,

$X_1^* = \begin{bmatrix} 1.568 & 15.28 & 20.80 & 8.028 \\ -0.639 & -13.65 & 36.04 & -2.785 \end{bmatrix}$.

In the implementation of the algorithm, all accelerometer measurements are rotated to the body frame and stacked into the matrix $M(k)$ as in equation (8) at each time step. Then, equations (20) and (26) are implemented to obtain the accelerometric estimates for the pitch and roll angles, $\hat{\beta}_a(k)$ and $\hat{\gamma}_a(k)$.

In order to reduce the noise level of the accelerometer-based estimates, a straightforward scheme for data fusion with the tri-axis rate gyro measurements may be used. Let $r(k) \in \mathbb{R}^3$ denote the body angular rate at time $k$, which is directly measured by a gyro that is mounted on the body. Thus, an estimate $\hat{r}(k)$ of this quantity may be obtained by averaging the measurements of all two gyros. The body rates are transformed to Euler angular rates by

$\begin{bmatrix} \hat{\dot{\alpha}}(k) \\ \hat{\dot{\beta}}(k) \\ \hat{\dot{\gamma}}(k) \end{bmatrix} = \begin{bmatrix} 0 & sin(\hat{\gamma}) / cos(\hat{\beta}) & cos(\hat{\gamma}) / cos(\hat{\beta}) \\ 0 & cos(\hat{\gamma}) & -sin(\hat{\gamma}) \\ 1 & sin(\hat{\gamma}) tan(\hat{\beta}) & cos(\hat{\gamma}) tan(\hat{\beta}) \end{bmatrix} \hat{r}(k)$, (Equation 27)

which requires estimates of the Euler angles $\hat{\beta}$ and $\hat{\gamma}$. For a straightforward implementation, the most recent estimate may be used as an approximation, i.e. $\hat{\beta} = \hat{\beta}(k - 1)$ and $\hat{\gamma} = \hat{\gamma}(k - 1)$.

Integrating the rate estimates in equation (27) yields estimates for the Euler angles that are based on the rate gyro measurements. Thus, the accelerometer- and gyro-based estimates can be fused to obtain a better overall estimate of the robot pitch and roll angles,

$\left\{ \begin{array}{l} \hat{\beta}(k) = \kappa_1 \hat{\beta}_a(k) + (1 - \kappa_1) (\hat{\beta}(k - 1) + T \hat{\dot{\beta}}(k)) &\\ \hat{\gamma}(k) = \kappa_2 \hat{\gamma}_a(k) + (1 - \kappa_2) (\hat{\gamma}(k - 1) + T \hat{\dot{\gamma}}(k)) \end{array} \right.$, (Equation 28)

where $T$ is the sampling time (the same as dt in the LQR struct) and $\kappa_1$ and $\kappa_2$ are tuning parameters that may be chosen such that the variance of the estimate is minimized given the noise specifications of accelerometers and rate gyros. For the application presented in this project, $\kappa_1 = \kappa_2 = 0.05$ was used.

The Experimental Results

The accelerometer-based tilt estimator was implemented on the Balancing Unicycle as described above. In the Balancing Unicycle application, the bias of the Euler angle estimates is corrected prior to operation in a calibration procedure, which accounts to first order for the accelerometer biases (see the struct fields accXOffset, accYOffset and accZOffset).

typedef struct

{

int16_t accXOffset;

int16_t accYOffset;

int16_t accZOffset;

float accXScale;

float accYScale;

float accZScale;

int16_t gyrXOffset;

int16_t gyrYOffset;

int16_t gyrZOffset;

float gyrXScale;

float gyrYScale;

float gyrZScale;

int16_t rawAccX;

int16_t rawAccY;

int16_t rawAccZ;

int16_t rawGyrX;

int16_t rawGyrY;

int16_t rawGyrZ;

float accX;

float accY;

float accZ;

float gyrX;

float gyrY;

float gyrZ;

float roll;

float pitch;

float yaw;

float roll_velocity;

float pitch_velocity;

float yaw_velocity;

float roll_acceleration;

float pitch_acceleration;

float yaw_acceleration;

Mat3 B_A_R; // The rotation of the local frame of the sensor i to the robot frame B̂

Vec3 R; // accelerometer sensor measurements in the local frame of the sensors

Vec3 _R; // accelerometer sensor measurements in the robot body frame

Vec3 G; // gyro sensor measurements in the local frame of the sensors

Vec3 _G; // gyro sensor measurements in the robot body frame

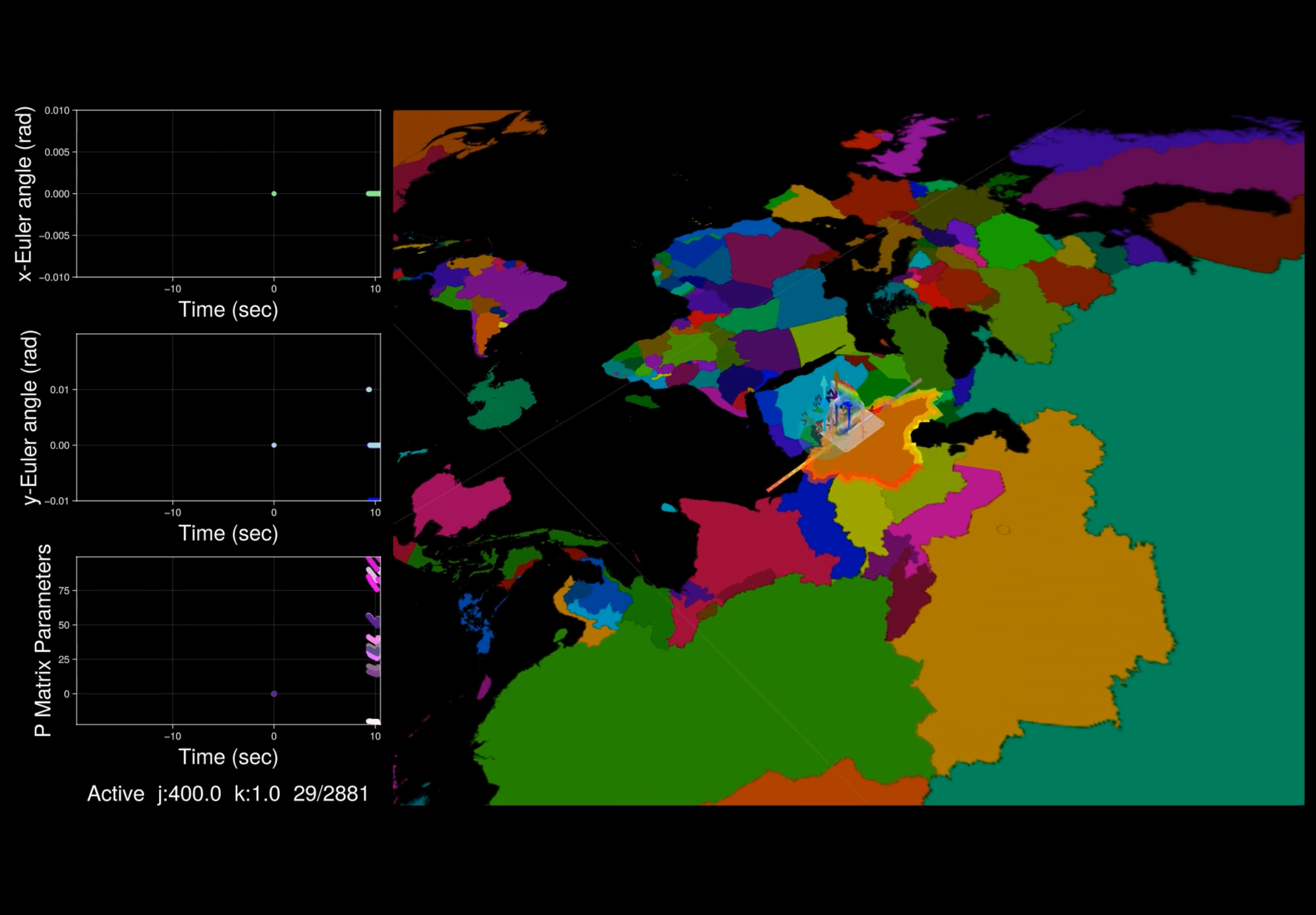

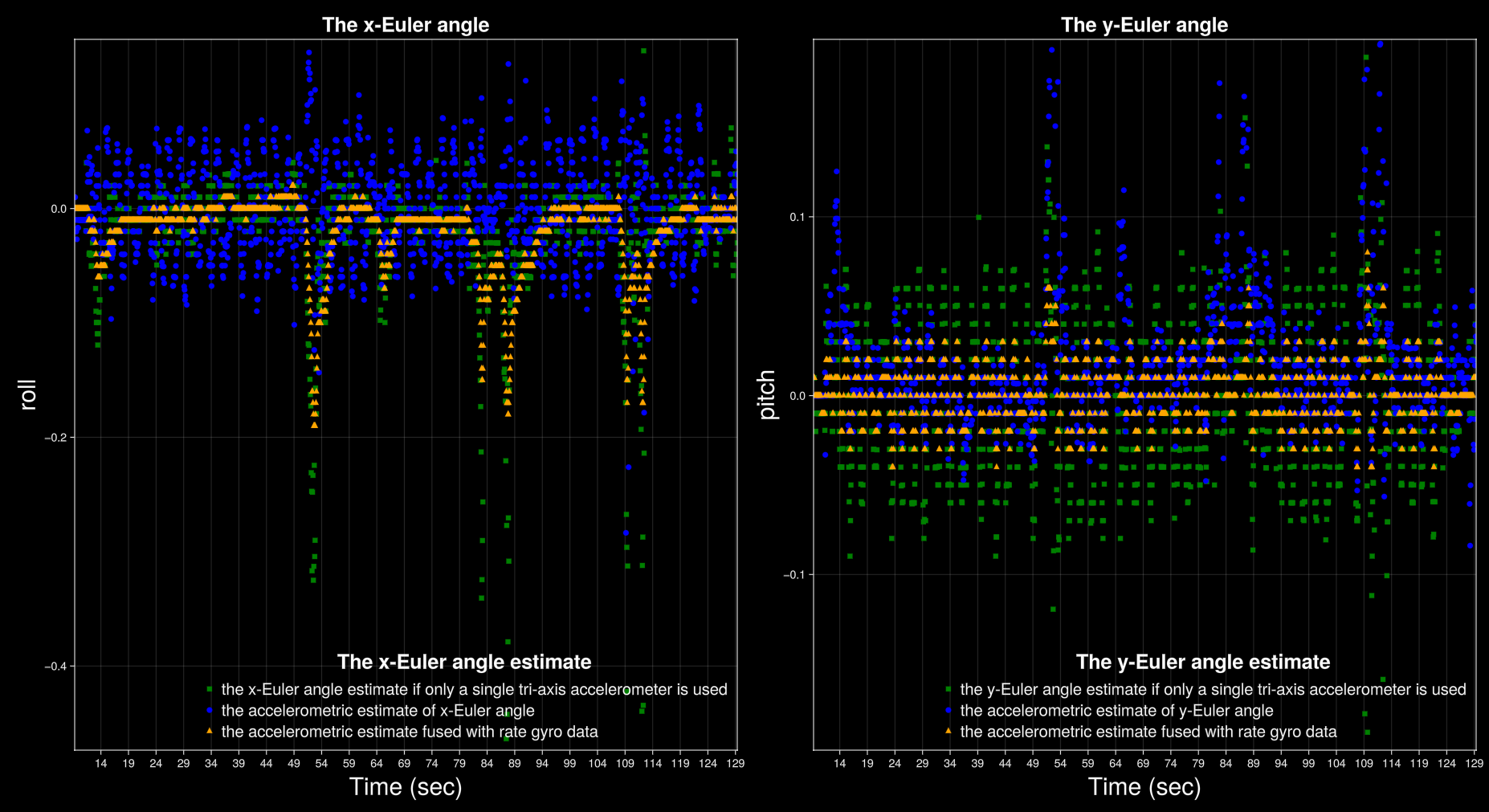

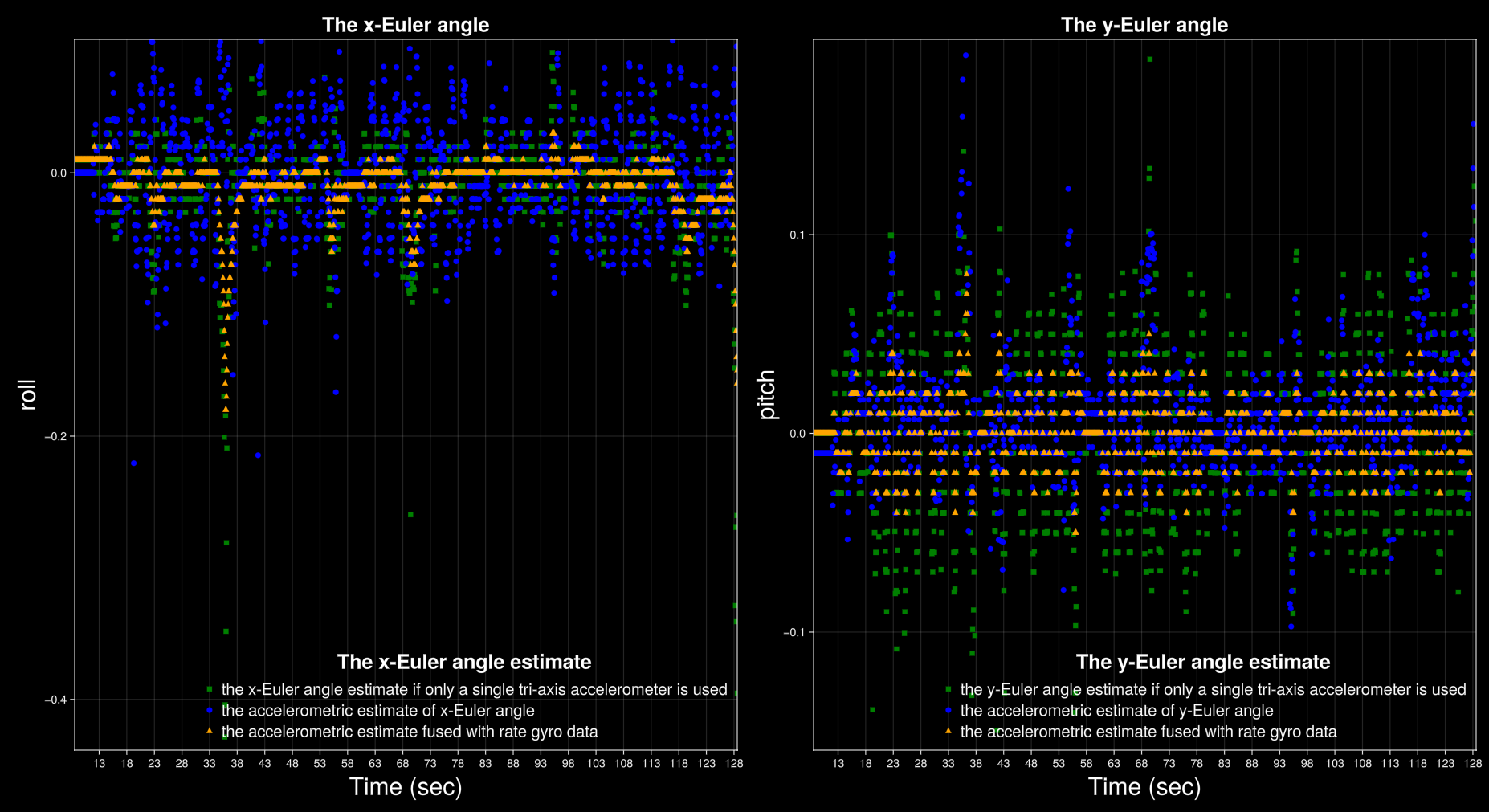

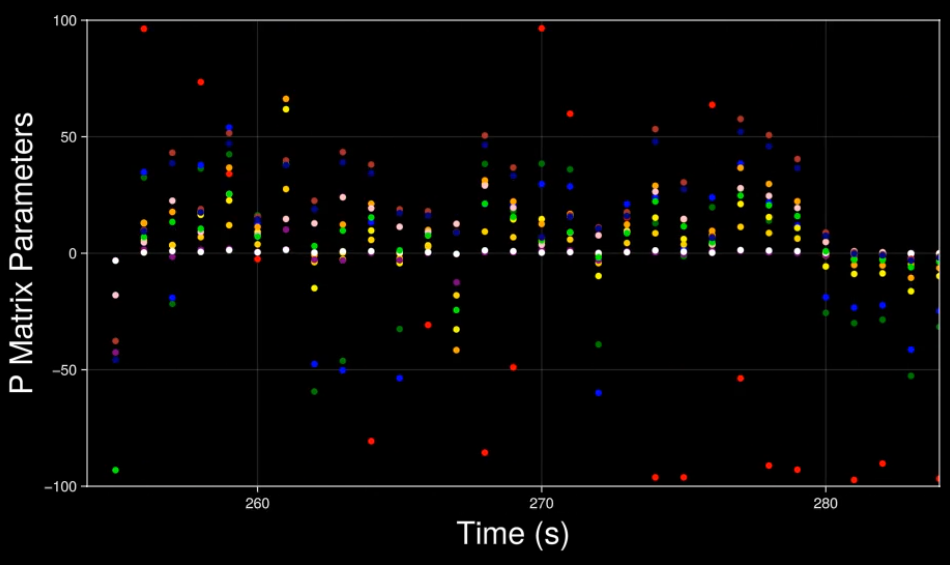

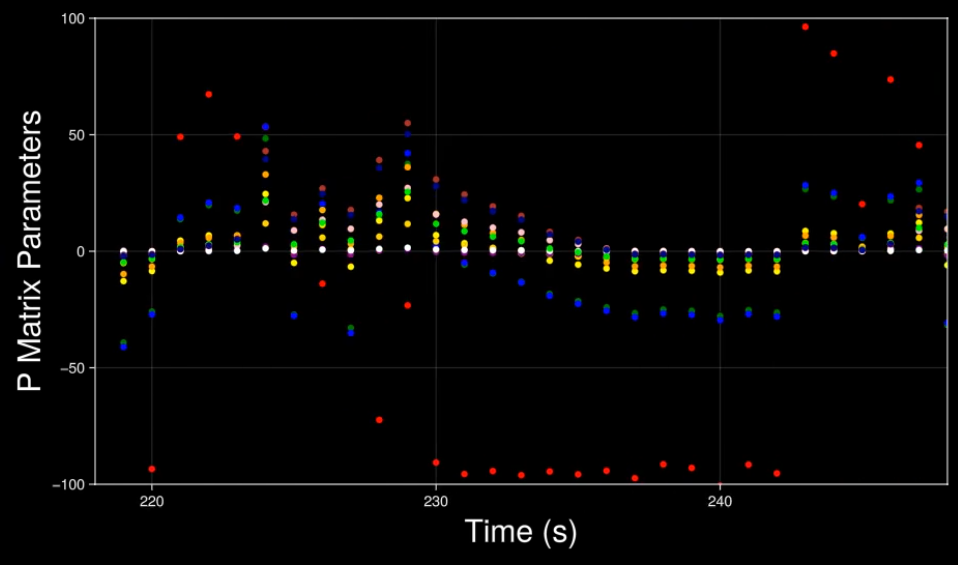

} IMU;To demonstrate the accelerometer-based tilt estimator, the robot was put on the rolling wheel and moved by hand (and also by the LQR controller) about the upright equilibrium position, which corresponds to the Euler angles $\gamma \approx 0$ and $\beta \approx 0$. The results are shown in the following figures. Clearly, the accelerometric estimate is accurate both for slow and fast motion of the robot. In contrast to the tilt estimation method, the same experiment is shown, but now only a single tri-axis accelerometer (sensor i = 1) is used to observe the gravity vector. When the robot is static (away from peaks and valleys), the estimate is accurate. However, when the robot is being moved the estimate suffers from the dynamic terms that act as disturbances to the static estimator. This demonstrates that the dynamics are not negligible. For the closed-loop operation of the system, i.e. for balancing the robot using the LQR model, the improved estimate for the robot angles (up triangles in orange) using both accelerometer and rate gyro data was used.

Estimation data during balancing of the robot: comparison of Euler angle estimates if only a single tri-axis accelerometer is used (green) to the accelerometric estimate of x- and y-Euler angles (blue), and to the accelerometric estimate fused with rate gyro data (orange).

Data sample 1:

Data sample 2:

The Micro-Controller Program

The function updateIMU provides the main source of data for the objective of the system. Through this function, the Micro-Controller Unit (MCU) talks to the Inertial Measurement Unit (IMU) modules 1 and 2 for updating the roll and pitch angles along with their first and second derivatives. This is done by calling the function and giving it a pointer to the Linear Quadratic Regulator (LQR) model object. Although both IMUs are used for tilt estimation, the final result is assigned to the field of IMU 1. This function encapsulates matrix-vector multiplications for coordinate transformations, the singular value decomposition for obtaining the gravity vector, and sensor fusion between the tri-axis accelerometers and the tri-axis gyroscopes. Knowing about the position and orientation of each IMU with respect to the body, the function excludes linear accelerations from calculations. So, UpdateIMU gathers the latest inertial measurements form multiple sensor units and computes the roll and pitch angles using known parameters of the system configuration.

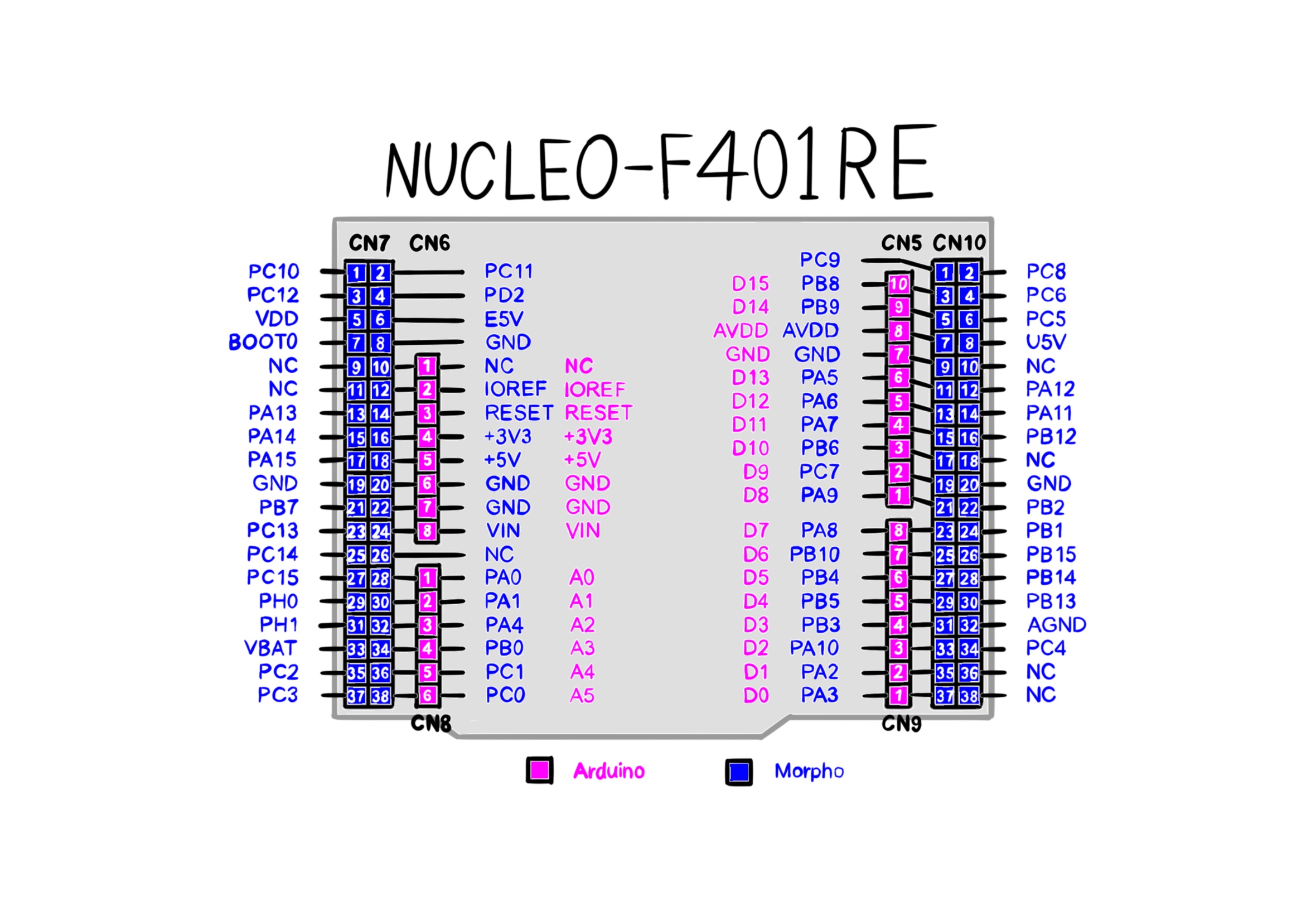

In terms of connectivity, The MCU peripheral USART1 is used to talk to IMU #2 (GY-95T). Set the baudrate of uart1 to 115200 Bits/s for the GY-95 IMU module. Set the Pin6 (PS: IIC/USART output mode selection) of IMU #2 (GY-25T) to zero, in order to use the I2C protocol. The I2C clock speed is set at 100000 Hz in the stanard mode. For saving MCU clock cycles and time, added a DMA request with USART1_RX and DMA2 Stream 2 from peripheral to memory and low priority. The mode is circular and the request call is made once in the main function by passing the usart1 handle and the receive buffer. The request increments the address of memory. The data width is one Byte for both the preipheral and memory.

void updateIMU(LinearQuadraticRegulator *model)

{

updateIMU1(&(model->imu1));

updateIMU2(&(model->imu2));

setIndexVec3(&(model->imu1.R), 0, model->imu1.accX);

setIndexVec3(&(model->imu1.R), 1, model->imu1.accY);

setIndexVec3(&(model->imu1.R), 2, model->imu1.accZ);

setIndexVec3(&(model->imu2.R), 0, model->imu2.accX);

setIndexVec3(&(model->imu2.R), 1, model->imu2.accY);

setIndexVec3(&(model->imu2.R), 2, model->imu2.accZ);

for (int i = 0; i < 3; i++)

{

setIndexVec3(&(model->imu1._R), i, 0.0);

setIndexVec3(&(model->imu2._R), i, 0.0);

}

for (int i = 0; i < 3; i++)

{

for (int j = 0; j < 3; j++)

{

setIndexVec3(&(model->imu1._R), i, getIndexVec3(model->imu1._R, i) + getIndexMat3(model->imu1.B_A_R, i, j) * getIndexVec3(model->imu1.R, j));

setIndexVec3(&(model->imu2._R), i, getIndexVec3(model->imu2._R, i) + getIndexMat3(model->imu2.B_A_R, i, j) * getIndexVec3(model->imu2.R, j));

}

}

for (int i = 0; i < 3; i++)

{

setIndexMat32(&(model->Matrix), i, 0, getIndexVec3(model->imu1._R, i));

setIndexMat32(&(model->Matrix), i, 1, getIndexVec3(model->imu2._R, i));

}

for (int i = 0; i < 3; i++)

{

for (int j = 0; j < 4; j++)

{

setIndexMat34(&(model->Q), i, j, 0.0);

for (int k = 0; k < 2; k++)

{

setIndexMat34(&(model->Q), i, j, getIndexMat34(model->Q, i, j) + getIndexMat32(model->Matrix, i, k) * getIndexMat24(model->X, k, j));

}

}

}

setIndexVec3(&(model->g), 0, getIndexMat34(model->Q, 0, 0));

setIndexVec3(&(model->g), 1, getIndexMat34(model->Q, 1, 0));

setIndexVec3(&(model->g), 2, getIndexMat34(model->Q, 2, 0));

// setIndexVec3(&(model->g), 0, getIndexVec3(model->imu1.R, 0));

// setIndexVec3(&(model->g), 1, getIndexVec3(model->imu1.R, 1));

// setIndexVec3(&(model->g), 2, getIndexVec3(model->imu1.R, 2));

model->beta = atan2(-getIndexVec3(model->g, 0), sqrt(pow(getIndexVec3(model->g, 1), 2) + pow(getIndexVec3(model->g, 2), 2)));

model->gamma = atan2(getIndexVec3(model->g, 1), getIndexVec3(model->g, 2));

setIndexVec3(&(model->imu1.G), 0, model->imu1.gyrX);

setIndexVec3(&(model->imu1.G), 1, model->imu1.gyrY);

setIndexVec3(&(model->imu1.G), 2, model->imu1.gyrZ);

setIndexVec3(&(model->imu2.G), 0, model->imu2.gyrX);

setIndexVec3(&(model->imu2.G), 1, model->imu2.gyrY);

setIndexVec3(&(model->imu2.G), 2, model->imu2.gyrZ);

for (int i = 0; i < 3; i++)

{

setIndexVec3(&(model->imu1._G), i, 0.0);

setIndexVec3(&(model->imu2._G), i, 0.0);

}

for (int i = 0; i < 3; i++)

{

for (int j = 0; j < 3; j++)

{

setIndexVec3(&(model->imu1._G), i, getIndexVec3(model->imu1._G, i) + getIndexMat3(model->imu1.B_A_R, i, j) * getIndexVec3(model->imu1.G, j));

setIndexVec3(&(model->imu2._G), i, getIndexVec3(model->imu2._G, i) + getIndexMat3(model->imu2.B_A_R, i, j) * getIndexVec3(model->imu2.G, j));

}

}

for (int i = 0; i < 3; i++)

{

setIndexVec3(&(model->r), i, (getIndexVec3(model->imu1._G, i) + getIndexVec3(model->imu2._G, i)) / 2.0);

// setIndexVec3(&(model->r), i, getIndexVec3(model->imu1._G, i));

}

setIndexMat3(&(model->E), 0, 0, 0.0);

setIndexMat3(&(model->E), 0, 1, sin(model->gamma) / cos(model->beta));

setIndexMat3(&(model->E), 0, 2, cos(model->gamma) / cos(model->beta));

setIndexMat3(&(model->E), 1, 0, 0.0);

setIndexMat3(&(model->E), 1, 1, cos(model->gamma));

setIndexMat3(&(model->E), 1, 2, -sin(model->gamma));

setIndexMat3(&(model->E), 2, 0, 1.0);

setIndexMat3(&(model->E), 2, 1, sin(model->gamma) * tan(model->beta));

setIndexMat3(&(model->E), 2, 2, cos(model->gamma) * tan(model->beta));

for (int i = 0; i < 3; i++)

{

setIndexVec3(&(model->rDot), i, 0.0);

}

for (int i = 0; i < 3; i++)

{

for (int j = 0; j < 3; j++)

{

setIndexVec3(&(model->rDot), i, getIndexVec3(model->rDot, i) + getIndexMat3(model->E, i, j) * getIndexVec3(model->r, j));

}

}

float rDot0 = getIndexVec3(model->rDot, 0) / 180.0 * M_PI;

float rDot1 = getIndexVec3(model->rDot, 1) / 180.0 * M_PI;

float rDot2 = -getIndexVec3(model->rDot, 2) / 180.0 * M_PI;

model->fusedBeta = model->kappa1 * model->beta + (1.0 - model->kappa1) * (model->fusedBeta + model->dt * rDot1);

model->fusedGamma = model->kappa2 * (-model->gamma) + (1.0 - model->kappa2) * (model->fusedGamma + model->dt * rDot2);

model->alpha += model->dt * rDot0;

float _roll = model->fusedBeta;

float _pitch = model->fusedGamma;

float _roll_velocity = (rDot1 + (_roll - model->imu1.roll) / model->dt) / 2.0;

float _pitch_velocity = (rDot2 + (_pitch - model->imu1.pitch) / model->dt) / 2.0;

model->imu1.roll_acceleration = _roll_velocity - model->imu1.roll_velocity;

model->imu1.pitch_acceleration = _pitch_velocity - model->imu1.pitch_velocity;

model->imu1.roll_velocity = _roll_velocity;

model->imu1.pitch_velocity = _pitch_velocity;

model->imu1.roll = _roll;

model->imu1.pitch = _pitch;

model->imu1.yaw = model->alpha;

}Stepping Through the Implementation

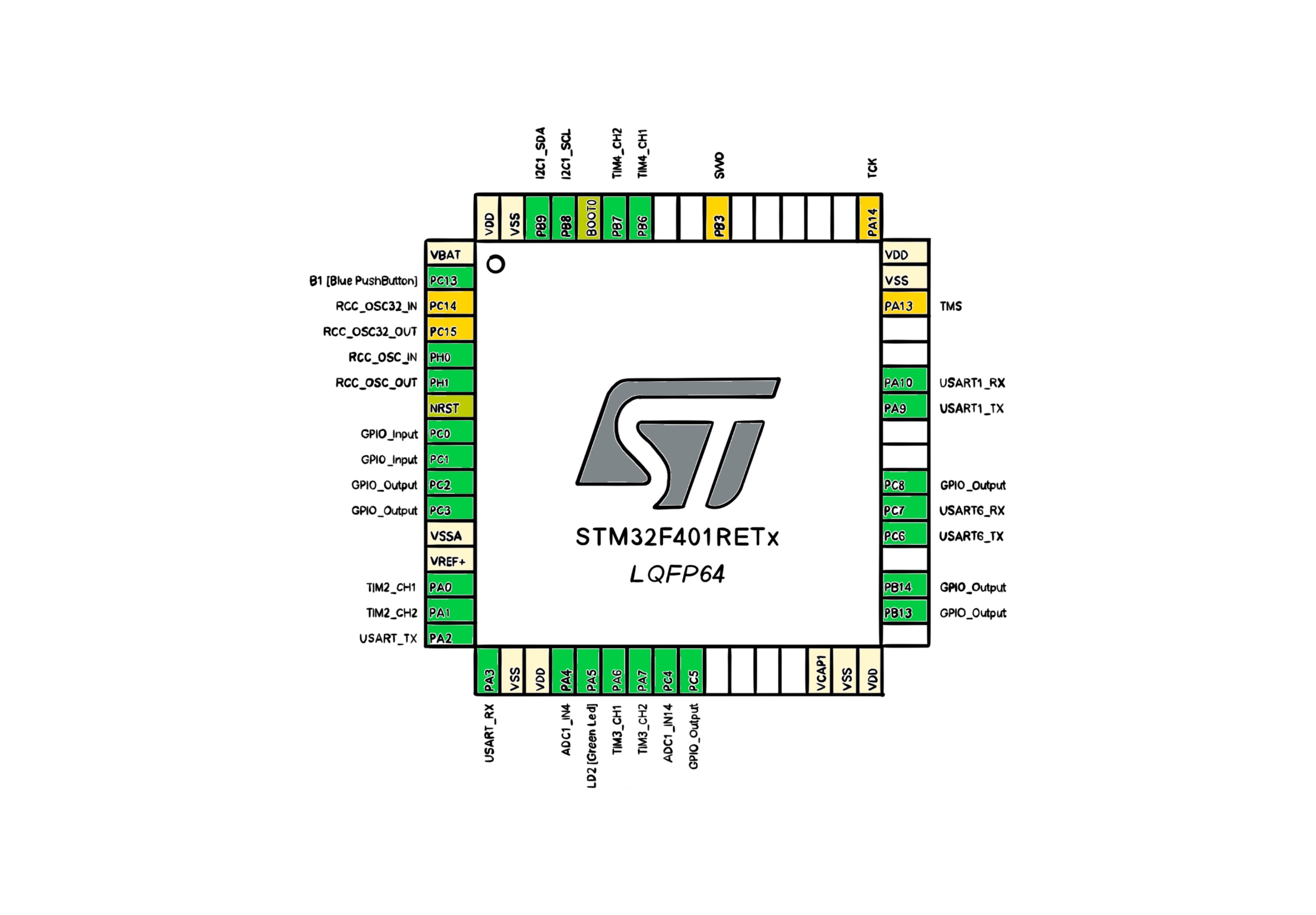

In this section, we step through the implementation of the robot's controller in the order of execution. The controller is implemented in the C programming language. It runs on a STM32F401RE mictocontroller, which is clocked at 84 MHz. Starting from first principles, there are at least two loops in a reinforcement learning program: the actor loop and the critic loop. The critic loop operates at a faster timescale and finds filter coefficients by taking actions, making mesurements and computing a recursive algorithm. In contrast, the actor loop is slower and updates the control policy, which is a function that produces actions. Even though actions are taken in the critic loop, the feedback policy function is the same across multiple runs of the loop. The actor loop is where the feedback policy is updated as a function of the latest set of filter coefficients. The state estimations and matrix parameters of the controller are stored in a data structure. The LinearQuadraticRegulator type is instantiated and initialized once, before either of the loops begin execution.

// Represents a Linear Quadratic Regulator (LQR) model.

typedef struct

{

Mat12 W_n; // filter matrix

Mat12 P_n; // inverse autocorrelation matrix

Mat210 K_j; // feedback policy

Vec12 dataset; // (xₖ, uₖ)

Vec12 z_n; // z_n in RLS

Vec12 g_n; // g_n in RLS

Vec12 alpha_n; // alpha_n in RLS

float x_n_dot_z_n; // the inner product of the x_n (dataset) and z_n

int j; // step number

int k; // time k

int n; // xₖ ∈ ℝⁿ

int m; // uₖ ∈ ℝᵐ

float lambda; // exponential wighting factor

float delta; // value used to intialize P(0)

int active; // is the model controller active

float CPUClock; // the CPU clock

float dt; // period in seconds

float reactionDutyCycle; // reaction wheel's motor PWM duty cycle

float rollingDutyCycle; // rolling wheel's motor PWM duty cycle

float reactionDutyCycleChange; // the maximum incremental change in the reaction motor's duty cycle

float rollingDutyCycleChnage; // the maximum incremental change in the rolling motor's duty cycle

float clippingValue; // the clipping value for any of the P matrix elements at which the clipping is applied

float clippingFactor; // the coefficient by which the P matrix elements are rescaled through scalar multiplication

float rollSafetyAngle; // the roll angle in radian beyond which the controller must become deactive for safety

float pitchSafetyAngle; // the pitch angle in radian beyond which the controller must become deactive for safety

float kappa1; // tuning parameters to minimize estimate variance (the ratio between the accelerometer and the gyroscope in sensor fusion)

float kappa2; // tuning parameters to minimize estimate variance (the ratio between the accelerometer and the gyroscope in sensor fusion)

int maxEpisodeLength; // the maximum number of interactions with the nevironment before the model becomes deactive for safety

int logPeriod; // the period between printing two log messages in terms of control cycles

int logCounter; // the number of control cycles elpased since the last log message printing

int maxOutOfBounds; // the maximum number of consecutive cycles where states are out of the safety bounds

int outOfBoundsCounter; // the number of consecutive times when either of safety angles have been detected out of bounds

float alpha; // z-Euler angle (yaw)

float beta; // y-Euler angle (pitch)

float gamma; // x-Euler angle (roll)

float fusedBeta; // y-Euler angle (pitch) as the result of fusing the accelerometer sensor measurements with the gyroscope sensor measurements

float fusedGamma; // x-Euler angle (roll) as the result of fusing the accelerometer sensor measurements with the gyroscope sensor measurements

int noiseQuotient; // the quotient of the random number for generating the probing noise

float noiseScale; // the scale of by which the remainder of the probing noise is to be divided

float time; // the time that has elapsed since the start up of the microcontroller in seconds

float changes; // the magnitude of the changes to the filter coefficients after one step forward

float convergenceThreshold; // the threshold value of the changes to filter coefficients below which the RLS is assumed to be converged

int convergenceCounter; // the number of consecutive times that the changes to filter coefficients are less than the convergence threshold

int convergenceMaxCount; // the maximum number of consecutive times for the changes below the threshold to determine convergence

Mat34 Q; // The matrix of unknown parameters

Vec3 r; // the average of the body angular rate from rate gyro

Vec3 rDot; // the average of the body angular rate in Euler angles

Mat3 E; // a matrix transfom from body rates to Euler angular rates

Mat24 X; // The optimal fusion matrix

Mat32 Matrix; // all sensor measurements combined

Vec3 g; // The gravity vector

Mat2 Suu; // The input-input kernel

Mat2 SuuInverse; // the inverse of the input-input kernel

Mat210 Sux; // the input-state kernel

Vec2 u_k; // the input vector

IMU imu1; // the first inertial measurement unit

IMU imu2; // the second inertial measurement unit

Encoder reactionEncoder; // the reaction wheel encoder

Encoder rollingEncoder; // the rolling wheel encoder

CurrentSensor reactionCurrentSensor; // the reaction wheel's motor current sensor

CurrentSensor rollingCurrentSensor; // the rolling wheel's motor current sensor

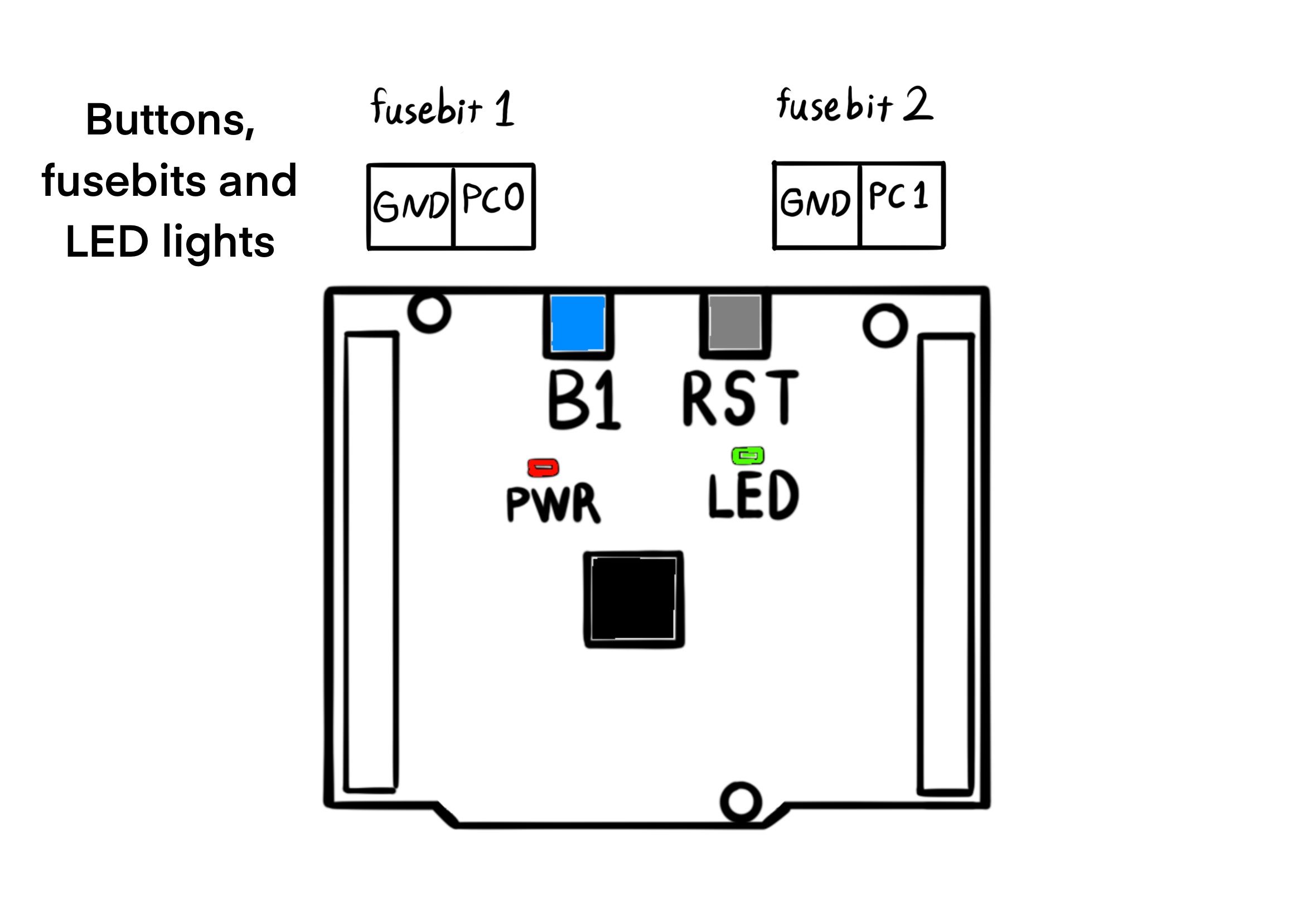

} LinearQuadraticRegulator;There are two fuse bits on the robot for configuration without flashing a program. The first one is connected to the port C of the general purpose input / output, pin 0. The fuse bit is active whenever the connected pin is grounded. The fuse bit deactivates the linear quadratic regulator by clearing the active field as a flag in the model structure. Even though the status of the fuse bit 0 is necessary to activate the model, it is not a sufficient condition. The user must connect the fuse bit and also push a blue push button once on the robot for activating the model. The push button is the same blue button that is found on the NUCLEOF401RE board. These two conditions are chained together for safety reasons. If the model is not active, then the robot must stop moving by calling the function resetActuators.

elapsedTime1 = DWT->CYCCNT;

if (HAL_GPIO_ReadPin(GPIOC, GPIO_PIN_0) == 0)

{

if (HAL_GPIO_ReadPin(GPIOC, GPIO_PIN_13) == 0)

{

HAL_GPIO_WritePin(GPIOA, GPIO_PIN_5, GPIO_PIN_RESET);

model.active = 1;

}

}

else

{

model.active = 0;

resetActuators(&model);

}When the reaction wheel unicycle falls over, the roll and pitch angles of the chassis with respect to the pivot point exceed ten degrees. It makes sense to disable the actuators after a fall has been detected to both save energy and minimize physical shock to gearboxes. The lower and upper bounds on the roll and pitch angles are combined using the logical "or" operator || with the episode counter so that the model stops running after the maximum number of interactions with the environment, the total steps in an episode. A fall or a certain number of interactions, whichever comes first, must cause the model to deactivate on its own. When the model is deactivated, the green light on the NUCLEOF401RE turns on to signify that the controller is no longer active. The user has four options whenever the green LED lights up:

Pick the robot up and make it stand upright, before pushing the blue push button to run again.

Switch the power button on the chassis to condition zero, in order to power off the robot.

Connect to the robot WiFi network and execute the following command in the terminal for printing the logs. Print

uart6serial messages by executing:nc 192.168.4.1 10000Activate the Porta.jl environment in a Julia REPL and then run the linked script for visualizing the logs: Unicycle

Since the robot is portable and has a feedback loop related to the motion of its body, violating the safety angle bounds does not immediately disable the model. Instead, the outOfBoundsCounter is incremented every time the safety conditions are violated and is decremented otherwise. Then the model is deactivated if the out of bounds counter is greater than maxOutOfBounds. This approach reduces the probability that a discontinous state estimation is able to trigger deactivation.

if (fabs(model.imu1.roll) > model.rollSafetyAngle || fabs(model.imu1.pitch) > model.pitchSafetyAngle || model.j > model.maxEpisodeLength)

{

model.outOfBoundsCounter = model.outOfBoundsCounter + 1;

}

else

{

model.outOfBoundsCounter = fmax(0, model.outOfBoundsCounter - 1);

}

if (model.outOfBoundsCounter > model.maxOutOfBounds)

{

model.active = 0;

HAL_GPIO_WritePin(GPIOA, GPIO_PIN_5, GPIO_PIN_SET);

}The microcontroller is built around a Cortex-M4 with Floating Point Unit (FPU) core, which contains hardware extensions for debugging features. The debug extensions allow the core to be stopped either on a given instruction fetch (breakpoint), or on data access (watchpoint). When stopped, the core's internal state and the system's external state may be examined. Once examination is complete, the core and the system may be restored and program execution resumed.

The ARM Cortex-M4 with FPU core provides integrated on-chip debug support. One of the debug features is called Data Watchpoint Trigger (DWT). The DWT unit provides a means to give the number of clock cycles. The DWT register CYCCNT counts the number of clock cycles. The period of a control loop is required in the application for integrating the gyroscopic angle rates. If we count the number of clocks twice: one time before the loop begins and one time after the loop ends, then we can find the time period that it takes to complete a control loop. In the beginning, we count the number of clocks by assigning the register value to a local variable called t1.

At the end of the control loop, where the model has taken one step forward, it is time to count the number of the processor's clock cycles for a second time for measuring delta t. At this point, by assigning the value of the DWT counter register to the variable t2 we can know how many cycles are there between t1 and t2. Then divide the difference by the number of Central Processing Unit (CPU) clock cycles per second cpuClock for finding the period of the control loop. The field dt of the model struct saves the control period.

If the model is set to active, then the controller takes one step forward. The function stepForward takes as argument a pointer to the model, mainly because two of its fields require persistent memory across runs: the filter matrix W_n and the inverse autocorrelation matrix P_n. The system state estimation is done by calling updateSensors. But since we extend the meaning of the value function to the quality function $Q(x, u)$, the states $x_k$ are appended by the inputs $u_k$, which in turn are computed by calling computeFeedbackPolicy. The function call applyFeedbackPolicy applies the action of the feedback policy, changing the angular velocity of the motors.

$Q(x, u) = Q(z) = W^T \phi(z)$

$x_k \in \mathbb{R^n}, \ u_k \in \mathbb{R^m}$

In the case where the model is active, the critic loop is run for a few times before the policy is updated by calling the updateControlPolicy function. However, when the model in not active, neither the critic loop nor the actor loop are executed. During the inactive mode of operation, the program makes measurements by calling the functions updateSensors and computeFeedbackPolicy, and resets the actuators by calling the function resetActuators.

if (model.active == 1)

{

t1 = DWT->CYCCNT;

updateSensors(&model);

computeFeedbackPolicy(&model);

applyFeedbackPolicy(&model);

stepForward(&model);

if (fabs(model.changes) < model.convergenceThreshold)

{

model.convergenceCounter = model.convergenceCounter + 1;

}

else

{

model.convergenceCounter = 0;

}

if (model.convergenceCounter >= model.convergenceMaxCount)

{

updateControlPolicy(&model);

}

model.logCounter = model.logCounter + 1;

t2 = DWT->CYCCNT;

diff = t2 - t1;

model.dt = (float)diff / model.CPUClock;

}

else

{

model.logPeriod = 80;

t1 = DWT->CYCCNT;

resetActuators(&model);

updateSensors(&model);

computeFeedbackPolicy(&model);

model.logCounter = model.logCounter + 1;

t2 = DWT->CYCCNT;

diff = t2 - t1;

model.dt = (float)diff / model.cpuClock;

}In order to monitor the controller and debug issues, we write the logs periodically to the standard input / output console. The variable log_counter is incremented by one every control cycle. Then, the log counter variable logCounter is compared to the constnt logPeriod for finding out if a cycle should be logged. But it is not a sufficient condition for logging, because a second fuse bit is also rquired for permission to log. The second fuse bit is connected to the port C of the general purpose input / output, pin 1. Whenever the fuse bit pin is grounded, it is activated. Once the fuse bit is active, the local transmission flag transmit is set at the relevant control cycle count. The reason for logPeriod is to limit the total number of logs per second, as the Micro-Controller Unit (MCU) is too fast for a continuous report. And the second fuse bit is there to turn off logging for saving time, as log transmission takes time away from the control processes. So by using the logical "and" operator && we can combine the logging period condition with the logging fuse bit, in order to manage the frequnecy of transmissions.

if (model.logCounter > model.logPeriod && HAL_GPIO_ReadPin(GPIOC, GPIO_PIN_1) == 0)

{

transmit = 1;

}If the transmit variable is equal to one, then the log counter logCounter is cleard along with the transmit variable, before transmission. The function sprintf is called with a message buffer MSG and a formatted string to populate the buffer with numbers. A log message can be anything, but for finding the matrix of known parameters in tilt estimation, the accelerometrs data must be included. After the message is composed, it is given as an argument to the function HAL_UART_Transmit, which stands for: Hardware Abstraction Layer, Universal Asynchronous Receiver / Transmitter, Transmit. The function also requires a pointer to uart6, which is a micro-controller peripheral for serial communications, and the size of the message buffer, along with a time out delay. Printing and transmitting the log finishes the actor loop. The console on the other side of tranmission should receive a line like this: AX1: -0.01, AY1: 1.03, AZ1: 0.00, | AX2: -0.05, AY2: 0.97, AZ2: -0.04, | roll: 0.03, pitch: -1.59, | encT: 0.46, encB: 4.31, | j: 1038.000000, | x0: -0.02, x1: -0.12, x2: 0.03, x3: -1.22, x4: -0.90, x5: 0.05, x6: 0.09, x7: -0.04, x8: 0.00, x9: -0.01, x10: 0.00, x11: 0.00, | P0: -96.08, P1: 0.27, P2: 2.04, P3: 1.18, P4: 1.36, P5: 0.34, P6: 0.03, P7: 0.77, P8: 0.08, P9: 0.57, P10: 62.48, P11: 62.48, dt: 0.001947.

You can visualize this example message using the Unicycle script. The example includes: the tri-axis acceleromer measurements of IMU 1 and IMU 2, the roll and pitch angles after the primary and secondary sensor fusions, the absolute position of both rotary encoders, and the diagonal entries of the inverse auto-correlation matrix P_n. Different messages can be composed for different use cases, for example printing raw sensor readings for calibrating the zero point and the scale of the accelerometers axes.

if (transmit == 1)

{

t1 = DWT->CYCCNT;

transmit = 0;

model.logCounter = 0;

sprintf(MSG,

"active: %0.1f, changes: %0.2f, | AX1: %0.2f, AY1: %0.2f, AZ1: %0.2f, | AX2: %0.2f, AY2: %0.2f, AZ2: %0.2f, | roll: %0.2f, pitch: %0.2f, yaw: %0.2f, | encT: %0.2f, encB: %0.2f, | j: %0.1f, k: %0.1f, | P0: %0.2f, P1: %0.2f, P2: %0.2f, P3: %0.2f, P4: %0.2f, P5: %0.2f, P6: %0.2f, P7: %0.2f, P8: %0.2f, P9: %0.2f, P10: %0.2f, P11: %0.2f, time: %0.2f, dt: %0.6f\r\n",

(float)model.active, model.changes, model.imu1.accX, model.imu1.accY, model.imu1.accZ, model.imu2.accX, model.imu2.accY, model.imu2.accZ, model.fusedBeta, model.fusedGamma, model.alpha, model.reactionEncoder.radianAngle, model.rollingEncoder.radianAngle, (float)model.j, (float)model.k, getIndexMat12(model.P_n, 0, 0), getIndexMat12(model.P_n, 1, 1), getIndexMat12(model.P_n, 2, 2), getIndexMat12(model.P_n, 3, 3), getIndexMat12(model.P_n, 4, 4), getIndexMat12(model.P_n, 5, 5), getIndexMat12(model.P_n, 6, 6), getIndexMat12(model.P_n, 7, 7), getIndexMat12(model.P_n, 8, 8), getIndexMat12(model.P_n, 9, 9), getIndexMat12(model.P_n, 10, 10), getIndexMat12(model.P_n, 11, 11), model.time, model.dt);

HAL_UART_Transmit(&huart6, MSG, sizeof(MSG), 1000);

t2 = DWT->CYCCNT;

diff = t2 - t1;

model.dt += (float)diff / model.CPUClock;

}

// Rinse and repeat :)

elapsedTime2 = DWT->CYCCNT;

elapsedTime = elapsedTime2 - elapsedTime1;

model.time += (float)elapsedTime / model.CPUClock;In order to enable the function sprintf to use floating point numbers, do the following steps:

- Open the file

gcc-arm-none-eabi.cmakethat is created by CubeMX. - Add the option

-u _printf_floattoCMAKE_C_FLAGS.

Set the baudrate of uart6 to 921600, for the wifi module HC-25. The HC-25 module settings are on the IP address 192.168.4.1 as a web page. Here is the checklist to set up a new module:

- The password is not set for new modules. So just login without a password to access the settings page.

- Set a username and password for the robot's access point.

- Set the WiFi Mode to AP for Access Point.

- Change the port number from 8080 to 10000.

- Set the Baud Rate parameter to 921600 Bits/s.

Step Forward

The function stepForward identifies the Q function using RLS with the given pointer to the model. The algorithm updates the Q function at each step. As a result, the filter matrix W_n and the inverse auto-correlation matrix P_n are updated. Performs a one-step update in the parameter vector $W$ by applying RLS to the equation:

$W_{j + 1}^T (\phi(z_k) - \gamma \phi(z_{k + 1})) = r(x_k, h_j(x_k))$

$W_{j + 1}^T (\phi(z_k) - \phi(z_{k + 1})) = \frac{1}{2} (x_k^T Q x_k + u_k^T R u_k)$